(Updated 4/22/2010 at 2:44 p.m.)

IBM officially announced the HX5 on Tuesday, so I’m going to take the liberty of digging a little deeper into the details of the blade server. I previously provided a high-level overview of the blade server in an earlier post, so now I want to get a little more technical, courtesy of IBM. It is my understanding that the server will reach “general availability” in the mid-June time frame; however, that is subject to change without notice.

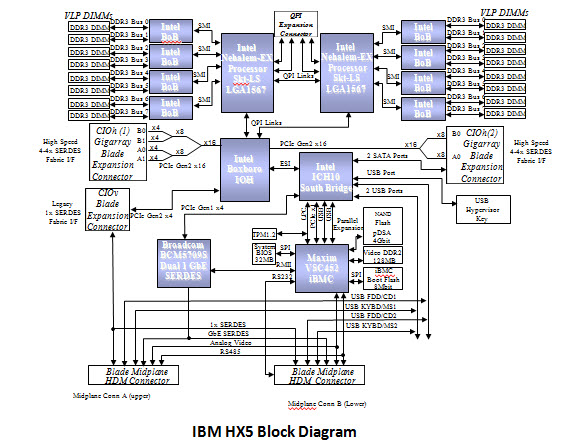

Block Diagram

Below are the details of the actual block diagram of the HX5. There are no secrets here, as IBM is using the Intel Xeon 6500 and 7500 series processors that I blogged about previously.

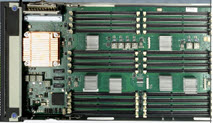

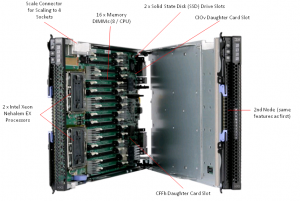

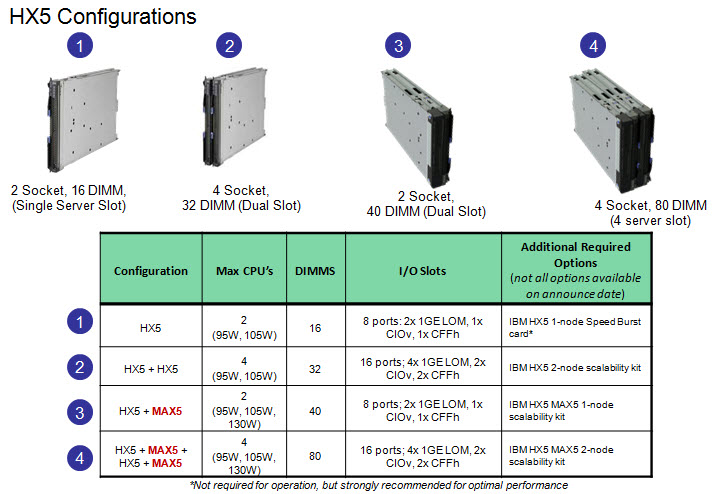

As previously mentioned, the value that the IBM HX5 blade server brings is scalability. A user can buy a single blade server with 2 CPUs and 16 DIMMs, then expand it to 40 DIMMs by adding a 24-DIMM MAX5 memory blade. Or, in the near future, a user could combine 2 x HX5 servers to make a 4-CPU server with 32 DIMMs, or add a MAX5 memory blade to each server and have a 4-CPU server with 80 DIMMs.
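To make the arithmetic behind those configurations explicit, here is a minimal sketch in Python. It only uses the per-unit figures stated above (2 CPUs and 16 DIMMs per HX5, 24 DIMMs per MAX5) and assumes, as the text describes, at most one MAX5 per HX5. The `Config` class and `CONFIGS` list are hypothetical names for illustration; this is simple counting, not an IBM configuration tool, and it does not check which combinations IBM actually supports.

```python
from dataclasses import dataclass

# Per-unit figures taken from the text above.
HX5_CPUS = 2     # CPUs in one HX5 blade
HX5_DIMMS = 16   # DIMM slots in one HX5 blade
MAX5_DIMMS = 24  # DIMM slots in one MAX5 memory blade


@dataclass(frozen=True)
class Config:
    """Hypothetical description of a scaled HX5 configuration."""
    name: str
    hx5_nodes: int    # number of HX5 blades joined by the scale connector
    max5_blades: int  # number of MAX5 memory blades attached

    @property
    def cpus(self) -> int:
        # Only HX5 blades contribute processors; MAX5 is memory only.
        return self.hx5_nodes * HX5_CPUS

    @property
    def dimms(self) -> int:
        # Total DIMM slots across all HX5 and MAX5 units.
        return self.hx5_nodes * HX5_DIMMS + self.max5_blades * MAX5_DIMMS


# The four configurations described in the paragraph above.
CONFIGS = [
    Config("1 x HX5", 1, 0),
    Config("1 x HX5 + MAX5", 1, 1),
    Config("2 x HX5 (scaled)", 2, 0),
    Config("2 x HX5 + 2 x MAX5 (scaled)", 2, 2),
]

if __name__ == "__main__":
    for c in CONFIGS:
        print(f"{c.name:<30} {c.cpus} CPUs, {c.dimms} DIMMs")
```

Running it lists 2 CPUs/16 DIMMs, 2 CPUs/40 DIMMs, 4 CPUs/32 DIMMs and 4 CPUs/80 DIMMs, matching the numbers quoted above.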

The diagrams below provide a more technical view of the HX5 + MAX5 configurations. Note that the “sideplanes” referenced below are actually the “scale connector.” As a reminder, this connector will physically connect 2 HX5 servers on the tops of the servers, allowing the internal communications to extend to each other’s nodes. The easiest way to think of this is like a Lego brick: it will allow HX5 and MAX5 units to be connected together. There will be 2-connector, 3-connector and 4-connector offerings.

(Updated) Since the original posting, IBM released the “eX5 Portfolio Technical Overview: IBM System x3850 X5 and IBM BladeCenter HX5,” so I encourage you to download it and give it a good read. David’s Redbook team always does a great job of answering, in those documents, any question you might have about an IBM server.

If there’s something about the IBM BladeCenter HX5 you want to know, let me know in the comments below and I’ll see what I can do.

Thanks for reading!