I recently had some discussions with a customer looking to connect a Dell EMC PowerEdge M1000e to a Cisco Nexus, and I was quite surprised at the number of resources available to assist in the project. Below you will find links to the documents I found, in the hope that they will help you out in the near future.

Tag Archives: Nexus

Blade Server Networking Options

If you are new to blade servers, you may find there are quite a few options to consider when it comes to managing your Ethernet traffic. Some vendors promote traditional integrated switching, while others promote extending the fabric to a Top of Rack (ToR) device. Each method has its own benefits, so let me explain what those are. Before I get started: although I work for Dell, this blog post is designed to be an unbiased review of the network options available from many blade server vendors.

Dell Announces Converged 10GbE Switch for M1000e

Updated 1/27/2011

Dell quietly announced the addition of a 10 Gigabit Ethernet (10GbE) switch module, known as the M8428-k. This blade module advertises 600 ns low-latency, wire-speed, 10GbE performance, Fibre Channel over Ethernet (FCoE) switching, and low-latency 8 Gb Fibre Channel (FC) switching and connectivity.

More HP and IBM Blade Rumours

I wanted to post a few more rumours before I head out to HP in Houston for “HP Blades and Infrastructure Software Tech Day 2010” so that it doesn’t appear that I got the info from HP. NOTE: this is purely speculation. I have no definitive information from HP, so this may be false info.

First off – the HP Rumour:

I’ve caught wind of a secret that may be truth, may be fiction, but I hope to find out for sure from the HP blade team in Houston. The rumour is that HP’s development team currently has a Cisco Nexus Blade Switch Module for the HP BladeSystem in their lab, and they are currently testing it out.

Now, this seems far-fetched, especially with the news of Cisco severing partner ties with HP. However, it seems that news tidbit was talking only about products sold with the HP label but made by Cisco (OEM). HP will continue to sell Cisco Catalyst switches for the HP BladeSystem and even Cisco branded Nexus switches with HP part numbers (see this HP site for details). I have some doubt about this rumour of a Cisco Nexus switch that would go inside the HP BladeSystem, simply because I am 99% sure that HP is announcing a Flex10 type of BladeSystem switch that will allow converged traffic to be split out, with the Ethernet traffic going to the Ethernet fabric and the Fibre traffic going to the Fibre fabric (check out this rumour blog I posted a few days ago for details). I guess only time will tell.

The IBM Rumour:

A few days ago I posted a rumour blog discussing the rumour that HP’s next generation of blades will add Converged Network Adapters (CNAs) to the motherboard (in lieu of the 1Gb or Flex10 NICs). Well, now I’ve uncovered a rumour that IBM is planning on following later this year with blades that will also have CNAs on the motherboard. This is huge! Let me explain why.

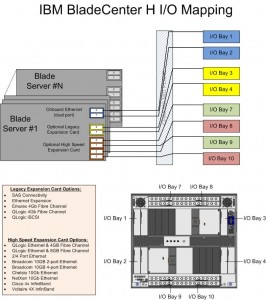

In the design of IBM’s BladeCenter E and BladeCenter H, the 1Gb NICs onboard each blade server are hard-wired to I/O Bays 1 and 2 – meaning only Ethernet modules can be used in these bays (see the image to the left for details). However, I/O Bays 1 and 2 are for “standard form factor I/O modules” while I/O Bays 7-10 are for “high speed form factor I/O modules”. This means that I/O Bays 1 and 2 cannot handle “high speed” traffic, i.e. converged traffic.

This means that IF IBM comes out with a blade server that has a CNA on the motherboard, either:

a) the blade’s CNA will have to route to I/O Bays 7-10

OR

b) IBM’s going to have to come out with a new BladeCenter chassis that allows the high speed converged traffic from the CNAs to connect to a high speed switch module in Bays 1 and 2.

So let’s think about this. If IBM (and HP for that matter) does put CNAs on the motherboard, is there a need for additional mezzanine/daughter cards? If not, the blade servers could have more real estate for memory, or more processors. And if there are no extra daughter cards, then there’s no need for additional I/O module bays. This means the blade chassis could be smaller and use less power – something every customer would like to have.

I can really see the blade market moving toward this type of design (not surprisingly, very similar to Cisco’s UCS design) – one where only a pair of redundant “modules” are needed to split converged traffic to their respective fabrics. Maybe it’s all a pipe dream, but when it comes true in 18 months, you can say you heard it here first.

Thanks for reading. Let me know your thoughts – leave your comments below.

(UPDATED) Officially Announced: IBM’s Nexus 4000 Switch: 4001I (PART 2)

I’ve gotten a lot of response from my first post, “REVEALED: IBM’s Nexus 4000 Switch: 4001I” and more information is coming out quickly so I decided to post a part 2. IBM officially announced the switch on October 20, 2009, so here’s some additional information:

- The Nexus 4001I Switch for the IBM BladeCenter is part # 46M6071 and has a list price of $12,999 (U.S.) each

- In order for the Nexus 4001I switch for the IBM BladeCenter to connect to an upstream FCoE switch, an additional software purchase is required. This item will be part # 49Y9983, “Software Upgrade License for Cisco Nexus 4001I.” This license upgrade allows for the Nexus 4001I to handle FCoE traffic. It has a U.S. list price of $3,899

- The Cisco Nexus 4001I for the IBM BladeCenter will be compatible with the following blade server expansion cards:
  - 2/4 Port Ethernet Expansion Card, part # 44W4479
  - NetXen 10Gb Ethernet Expansion Card, part # 39Y9271
  - Broadcom 2-port 10Gb Ethernet Exp. Card, part # 44W4466
  - Broadcom 4-port 10Gb Ethernet Exp. Card, part # 44W4465
  - Broadcom 10 Gb Gen 2 2-port Ethernet Exp. Card, part # 46M6168
  - Broadcom 10 Gb Gen 2 4-port Ethernet Exp. Card, part # 46M6164
  - QLogic 2-port 10Gb Converged Network Adapter, part # 42C1830

- (UPDATED 10/22/09) The newly announced Emulex Virtual Adapter WILL NOT work with the Nexus 4001I IN VIRTUAL NIC (vNIC) mode. It will work in pNIC mode according to IBM.

The Cisco Nexus 4001I switch for the IBM BladeCenter is a new approach to getting converged network traffic. As I posted a few weeks ago in my post, “How IBM’s BladeCenter works with Cisco Nexus 5000”, before the Nexus 4001I was announced, in order to get your blade servers to communicate with a Cisco Nexus 5000 you had to use a CNA and a 10Gb Pass-Thru Module, as shown on the left. The pass-thru module used in that solution requires a direct connection from the pass-thru module to the Cisco Nexus 5000 for every blade server that requires connectivity. This means for 14 blade servers, 14 connections are required to the Cisco Nexus 5000. This solution definitely works – it just eats up 14 Nexus 5000 ports. With the pass-thru module at $4,999 list (U.S.), plus the cost of the GBICs, the “pass-thru” scenario may be a good solution for budget-conscious environments.

In comparison, with the IBM Nexus 4001I switch, we can now have as few as 1 uplink to the Cisco Nexus 5000 from the Nexus 4001I switch. This leaves more open ports on the Cisco Nexus 5000 for connections to other IBM BladeCenters with Nexus 4001I switches, or for connectivity from your rack-based servers with CNAs.

Bottom line: the Cisco Nexus 4001I switch will reduce your port requirements on your Cisco Nexus 5000 or Nexus 7000 switch by allowing up to 14 servers to uplink via 1 port on the Nexus 4001I.
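To put rough numbers on the pass-thru vs. Nexus 4001I comparison, here is a minimal Python sketch using only the list prices and port counts quoted in this post. The class and function names are hypothetical, it counts a single module per chassis (a redundant pair would double everything), and it leaves out GBIC and cabling costs, so treat it as back-of-the-envelope math rather than a quote.

```python
from dataclasses import dataclass

BLADES_PER_CHASSIS = 14  # blade count used in the post's pass-thru example


@dataclass(frozen=True)
class ChassisOption:
    """One way of getting converged traffic out of a single chassis (hypothetical model)."""
    name: str
    module_list_price: int    # U.S. list price per module, as quoted in the post
    license_list_price: int   # extra software license, 0 if none
    nexus5000_ports_used: int


def pass_thru(blades: int = BLADES_PER_CHASSIS) -> ChassisOption:
    # The pass-thru module needs one Nexus 5000 port per blade with a CNA.
    return ChassisOption("10Gb pass-thru", 4999, 0, blades)


def nexus_4001i(uplinks: int = 1, fcoe: bool = True) -> ChassisOption:
    # The FCoE software upgrade license is only counted when FCoE is needed.
    return ChassisOption("Nexus 4001I", 12999, 3899 if fcoe else 0, uplinks)


def summarize(option: ChassisOption) -> str:
    total = option.module_list_price + option.license_list_price
    return f"{option.name}: ${total:,} list, {option.nexus5000_ports_used} Nexus 5000 port(s)"


if __name__ == "__main__":
    for option in (pass_thru(), nexus_4001i()):
        print(summarize(option))
```

The trade-off shows up directly: the pass-thru option is cheaper per module but consumes one upstream port per blade, while the 4001I costs more up front and frees most of those ports.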

For more details on the IBM Nexus 4001I switch, I encourage you to go to the newly released IBM Redbook for the Nexus 4001I Switch.

IBM Announces Emulex Virtual Fabric Adapter for BladeCenter…So?

Emulex and IBM announced today the availability of a new Emulex expansion card for blade servers that allows up to 8 virtual NICs to be presented per card. The “Emulex Virtual Fabric Adapter for IBM BladeCenter (IBM part # 49Y4235)” is a CFF-H expansion card based on industry-standard PCIe architecture, and it can operate as a “Virtual NIC Fabric Adapter” or as a dual-port 10 Gb or 1 Gb Ethernet card.

When operating as a Virtual NIC (vNIC), each of the 2 physical ports appears to the blade server as 4 virtual NICs, for a total of 8 virtual NICs per card. According to IBM, the default bandwidth for each vNIC is 2.5 Gbps. The cool feature about this mode is that the bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port. The one catch with this mode is that it ONLY operates with the BNT Virtual Fabric 10Gb Switch Module, which provides independent control for each vNIC. This means no connection to Cisco Nexus…yet. According to Emulex, firmware updates coming later (Q1 2010??) will allow this adapter to handle FCoE and iSCSI as a feature upgrade. Not sure if that means compatibility with Cisco Nexus 5000 or not. We’ll have to wait and see.
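The vNIC bandwidth rules above can be sketched as a small Python check. This is purely illustrative and not an Emulex or BNT tool: the function name is made up, and it assumes that the enabled vNICs sharing one physical port cannot add up to more than that port's 10 Gb, and that a disabled vNIC is simply left out of the list.

```python
VNICS_PER_PORT = 4          # each physical port shows up as 4 vNICs
MIN_VNIC_MBPS = 100         # lowest configurable vNIC bandwidth
MAX_VNIC_MBPS = 10_000      # highest configurable vNIC bandwidth
PORT_CAPACITY_MBPS = 10_000 # assumption: vNICs on one port share its 10 Gb
DEFAULT_VNIC_MBPS = 2_500   # default per-vNIC bandwidth according to IBM


def validate_port_allocation(vnic_mbps):
    """Return the total Mbps allocated on one physical port, or raise ValueError.

    `vnic_mbps` lists the bandwidth of each enabled vNIC on the port.
    """
    if not 1 <= len(vnic_mbps) <= VNICS_PER_PORT:
        raise ValueError(f"expected 1 to {VNICS_PER_PORT} vNICs, got {len(vnic_mbps)}")
    for mbps in vnic_mbps:
        if not MIN_VNIC_MBPS <= mbps <= MAX_VNIC_MBPS:
            raise ValueError(f"{mbps} Mbps is outside {MIN_VNIC_MBPS}-{MAX_VNIC_MBPS} Mbps")
    total = sum(vnic_mbps)
    if total > PORT_CAPACITY_MBPS:
        raise ValueError(f"{total} Mbps exceeds the {PORT_CAPACITY_MBPS} Mbps port")
    return total


if __name__ == "__main__":
    # The default layout: four vNICs at 2.5 Gbps each exactly fills the port.
    print(validate_port_allocation([DEFAULT_VNIC_MBPS] * VNICS_PER_PORT))
```

Under these assumptions the default of four vNICs at 2.5 Gbps uses the whole port, so giving one vNIC more bandwidth means taking it away from another.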

When used as a normal Ethernet Adapter (10Gb or 1Gb), aka “pNIC mode”, the card is viewed as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card. The big difference here is that it will work with any available 10 Gb switch or 10 Gb pass-thru module installed in I/O module bays 7 and 9.

So What?

I’ve known about this adapter since VMworld, but I haven’t blogged about it because I just don’t see a lot of value. HP has had this functionality for over a year now in their Virtual Connect Flex-10 offering, so this technology is nothing new. Yes, it would be nice to set up a NIC in VMware ESX that only uses 200 Mbps of a pipe, but what’s the difference between having a fake NIC that “thinks” he’s only able to use 200 Mbps and a big fat 10Gb pipe for all of your I/O traffic? I’m just not sure, but I am open to any comments or thoughts.

How IBM's BladeCenter works with Cisco Nexus 5000

Other than Cisco’s UCS offering, IBM is currently the only blade vendor who offers a Converged Network Adapter (CNA) for the blade server. The 2-port CNA sits on the server in a PCI Express slot and is mapped to the high speed bays, with CNA port #1 going to High Speed Bay #7 and CNA port #2 going to High Speed Bay #9. Here’s an overview of the IBM BladeCenter H I/O Architecture:

Since the CNAs are only switched to I/O Bays 7 and 9, those are the only bays that require a “switch” for the converged traffic to leave the chassis. At this time, the only option to get the converged traffic out of the IBM BladeCenter H is via a 10Gb “pass-thru” module. A pass-thru module is not a switch – it just passes the signal through to the next layer, in this case the Cisco Nexus 5000.

10 Gb Ethernet Pass-thru Module for IBM BladeCenter

The pass-thru module is relatively inexpensive; however, it requires a connection to the Nexus 5000 for every server that has a CNA installed. As a reminder, the IBM BladeCenter H can hold up to 14 servers with CNAs installed, so that would require 14 of the 20 ports on a Nexus 5010. This is a small price to pay, however, to gain the 80% efficiency that 10Gb Datacenter Ethernet (or Converged Enhanced Ethernet) offers. The overall architecture for the IBM Blade Server with CNA + IBM BladeCenter H + Cisco Nexus 5000 would look like this:

Hopefully when IBM announces their Cisco Nexus 4000 switch for the IBM BladeCenter H later this month, it will provide connectivity to CNAs on the IBM Blade server and help reduce the number of connections required to the Cisco Nexus 5000 from 14 to perhaps 6 ;)
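The port arithmetic in this post is easy to play with. Below is a small Python sketch of a port budget for a 20-port Nexus 5010. The function name and the dictionary it returns are my own invention for illustration, and the 6-connection case is just the hoped-for figure mentioned above, not an announced spec.

```python
NEXUS_5010_PORTS = 20  # port count used in the post


def port_budget(connections_per_chassis, chassis_count=1, switch_ports=NEXUS_5010_PORTS):
    """Return used/free port counts and utilization for one upstream switch."""
    used = connections_per_chassis * chassis_count
    if used > switch_ports:
        raise ValueError(f"{used} connections do not fit on a {switch_ports}-port switch")
    return {
        "used": used,
        "free": switch_ports - used,
        "utilization": used / switch_ports,
    }


if __name__ == "__main__":
    # One fully populated chassis on pass-thru modules: 14 blades, 14 ports.
    print(port_budget(14))
    # The same switch if each chassis only needed 6 connections.
    print(port_budget(6, chassis_count=3))
```

With pass-thru, a single full chassis takes 14 of the 20 ports and a second one will not fit. At 6 connections per chassis, three chassis would share the same switch.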

Cisco Announces Nexus 4000 Switch for Blade Chassis

Cisco is announcing today the release of the Nexus 4000 switch. It will be designed to work in “other” blade vendors’ chassis, although they aren’t announcing which blade vendors. My gut is that Dell and IBM will OEM it, but HP will stick with their ProCurve line announced a few weeks ago. Here’s what I know about the Nexus 4000 switch:

1) It will aggregate 1Gb links to 10Gb uplinks. To me, this means that it will not be compatible with Converged Network Adapters (CNAs). From this description, it seems to be just the Cisco Nexus 2000 in a blade form factor. It’s simply a “fabric extender” allowing all of the traffic to flow into the Nexus 5000 Switch.

2) It will run on the Nexus O/S (NX-OS). This is key because it allows users to have a seamless environment for their server and their Nexus switch infrastructure.

3) Cisco Nexus 4000 will provide “cost effective transition from multiple 1GbE links to a lossless 10GbE for virtualized environments”. This statement confuses me. Does it mean that the Cisco Nexus 4000 switch will be capable of working with 1Gb NICs as well as 10Gb CNAs, or is it just stating that the traditional 1Gb NICs will be able to connect into a lossless unified fabric?

Cisco is having a live broadcast at 10 am PST today, but I just reviewed the slide deck and they talk at a VERY high level on this new announcement. I suppose maybe they are going to let each vendor (Dell and IBM) provide details once they officially announce their switches. When they do, I’ll post details here.