Dell appears to be first to market today with complete details on its Nehalem EX blade server, the PowerEdge M910. Based on Intel's Nehalem EX technology (aka the Intel Xeon 7500 series), the server offers quite a lot of horsepower in a small, full-height blade server footprint.

Some details about the server:

- uses Intel Xeon 7500 or 6500 CPUs

- supports up to 512GB of memory using 32 x 16GB DIMMs

- comes standard with two embedded Broadcom NetXtreme II Dual Port 5709S Gigabit Ethernet NICs with failover and load balancing

- has two 2.5″ Hot-Swappable SAS/Solid State Drives

- has 4 available I/O mezzanine card slots

- comes standard with a Matrox G200eW video controller with 8MB of memory

- can run with only 2 CPUs while still accessing all 32 DIMM slots

Dell (finally) Offers Some Innovation

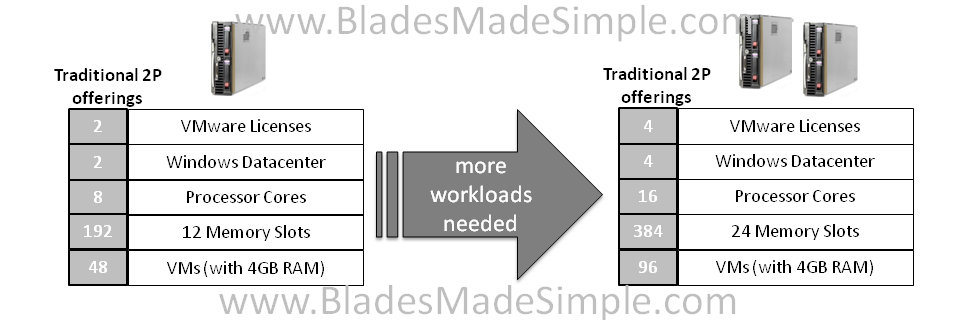

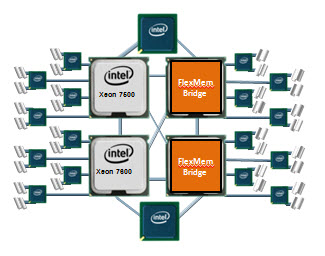

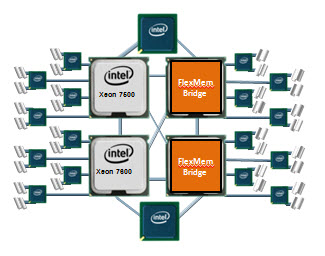

A few weeks ago I commented that “Dell” and “innovation” were rarely used in the same sentence. With today’s announcement, I’ll have to retract that statement. Before I explain what I mean, here is a quick bit of background. In the Nehalem architecture, each processor (CPU) has its own memory controller and a dedicated bank of memory. The only downside is that if a CPU socket is not populated, the memory banks attached to it are not usable. THIS is where Dell is offering some innovation. Today Dell announced its “FlexMem Bridge” technology. The concept is simple: it allows the memory attached to an unpopulated CPU socket to still be used. In essence, Dell’s technology bridges the memory banks of unpopulated CPU sockets to the server’s populated CPUs. With this technology, a user could start off with only 2 CPUs and still have access to all 32 memory DIMMs. Then, over time, if more CPUs are needed, they simply remove the FlexMem Bridge adapters from the empty CPU sockets and replace them with CPUs, giving them a 4 CPU x 32 DIMM blade server.
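To make the memory math concrete, here is a minimal Python sketch of how many DIMM slots are reachable in each configuration. It is a simplified model, not anything from Dell. It assumes the 32 DIMM slots are split evenly at 8 per socket across the 4 sockets, and that every slot holds a 16GB DIMM (which matches the 512GB maximum). The `Socket` states and helper functions are hypothetical names for illustration only, and the model ignores performance effects such as which CPU a bridged memory bank ends up attached to.

```python
from enum import Enum

class Socket(Enum):
    CPU = "cpu"        # processor installed: its memory controller drives the local DIMMs
    BRIDGE = "bridge"  # hypothetical model of a FlexMem Bridge: local DIMMs routed to a populated CPU
    EMPTY = "empty"    # nothing installed: the local DIMMs are unreachable

# Assumption: 32 DIMM slots / 4 sockets = 8 slots per socket on the M910.
DIMMS_PER_SOCKET = 8
# Assumption: every slot holds a 16GB DIMM (32 x 16GB = 512GB).
DIMM_SIZE_GB = 16

def usable_dimm_slots(sockets):
    """Count DIMM slots that some installed CPU can reach."""
    if Socket.CPU not in sockets:
        return 0  # no processor means no memory controller to use any DIMM
    # Slots behind a CPU or a bridge are usable; slots behind an empty socket are not.
    return sum(DIMMS_PER_SOCKET for s in sockets if s in (Socket.CPU, Socket.BRIDGE))

def usable_memory_gb(sockets):
    """Total usable memory, assuming every reachable slot holds a 16GB DIMM."""
    return usable_dimm_slots(sockets) * DIMM_SIZE_GB

if __name__ == "__main__":
    configs = {
        "2 CPUs, 2 empty sockets": [Socket.CPU, Socket.CPU, Socket.EMPTY, Socket.EMPTY],
        "2 CPUs, 2 bridges": [Socket.CPU, Socket.CPU, Socket.BRIDGE, Socket.BRIDGE],
        "4 CPUs": [Socket.CPU] * 4,
    }
    for name, sockets in configs.items():
        print(f"{name}: {usable_dimm_slots(sockets)} DIMM slots, {usable_memory_gb(sockets)}GB")
```

Under these assumptions, two CPUs with two empty sockets reach only 16 slots (256GB), while two CPUs plus two bridges reach all 32 slots (512GB), the same as a fully populated 4 CPU blade.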

With this technology, a user could start of with only 2 CPUs and still have access to 32 memory DIMMs. Then, over time, if more CPUs are needed, they simply remove the FlexMem Bridge adapters from the CPU sockets then replace with CPUs – now they would have a 4 CPU x 32 DIMM blade server.

Congrats to Dell. Very cool idea. The Dell PowerEdge M910 is available to order today from the Dell.com website.

Let me know what you guys think.