
Tag Archives: Emulex

Shared I/O – The Future of Blade Servers?

Last week, Blade.org invited me to their 3rd Annual Technology Symposium – an online event with speakers from APC, Blade Network Technologies, Emulex, IBM, NetApp, Qlogic and Virtensys. Blade.org is a collaborative organization and developer community focused on accelerating the development and adoption of open blade server platforms. This year’s Symposium focused on “the dynamic data center of the future”. While there were many interesting topics (check out the replay here), the one that appealed to me most was “Shared I/O” by Alex Nicolson, VP and CTO of Emulex. Let me explain why.

Virtual I/O on IBM BladeCenter (IBM Virtual Fabric Adapter by Emulex)

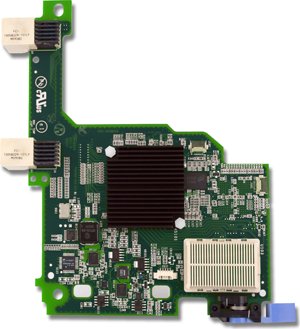

A few weeks ago, IBM and Emulex announced a new blade server adapter for the IBM BladeCenter and IBM System x line, called the "Emulex Virtual Fabric Adapter for IBM BladeCenter" (IBM part # 49Y4235). Frequent readers may recall that I had a "so what" attitude when I blogged about it in October, and that was because I didn't get it. I didn't get what the big deal was with being able to take a 10Gb pipe and carve it up into 4 "virtual NICs". HP's been doing this for a long time with their FlexNICs (check out VirtualKennth's blog for great detail on this technology), so I didn't see the value in what IBM and Emulex were trying to do. But now I understand. Before I get into this, let me remind you of what this adapter is. The Emulex Virtual Fabric Adapter (CFFh) for IBM BladeCenter is a dual-port 10 Gb Ethernet card that supports 1 Gbps or 10 Gbps traffic, or up to eight virtual NIC devices.

This adapter hopes to address three key I/O issues:

1. Need for more than two ports per server, with 6-8 recommended for virtualization

2. Need for more than 1Gb bandwidth, but can't support full 10Gb today

3. Need to prepare for network convergence in the future

"1, 2, 3, 4"

I recently attended an IBM/Emulex partner event where Emulex presented a unique way to understand the value of the Emulex Virtual Fabric Adapter via the term "1, 2, 3, 4". Let me explain:

"1" – Emulex uses a single chip architecture for these adapters. (As a non-I/O guy, I'm not sure of why this matters – I welcome your comments.)

"2" – Supports two platforms: rack and blade (Easy enough to understand, but this also emphasizes that a majority of the new IBM System x servers announced this week will have the Virtual Fabric Adapter "standard")

"3" – Emulex will have three product models for IBM (one for blade servers, one for the rack servers and one integrated into the new eX5 servers)

"4" – There are four modes of operation:

- Legacy 1Gb Ethernet

- 10Gb Ethernet

- Fibre Channel over Ethernet (FCoE)…via software entitlement ($$)

- iSCSI Hardware Acceleration…via software entitlement ($$)

This last part is the key to the reason I think this product could be of substantial value. The adapter enables a user to begin with traditional Ethernet, then grow into 10Gb, FCoE or iSCSI without any physical change – all they need to do is buy a license (for the FCoE or iSCSI).

Modes of operation

The expansion card has two modes of operation: standard physical port mode (pNIC) and virtual NIC (vNIC) mode.

In vNIC mode, each physical port appears to the blade server as four virtual NICs with a default bandwidth of 2.5 Gbps per vNIC. Bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port.

In pNIC mode, the expansion card can operate as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card.

As previously mentioned, a future entitlement purchase will allow for up to two FCoE ports or two iSCSI ports. The FCoE and iSCSI ports can be used in combination with up to six Ethernet ports in vNIC mode, up to a maximum of eight total virtual ports.
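To make the vNIC numbers above concrete, here is a minimal Python sketch that checks a bandwidth plan for one physical port. It is an illustration only, not an IBM or Emulex tool: the function name `validate_port` is hypothetical, and the rule that the vNICs on one port cannot add up to more than 10 Gb is my reading of the "maximum of 10 Gb" wording, so treat it as an assumption.

```python
from typing import List

# Figures taken from the vNIC mode description above.
VNICS_PER_PORT = 4
DEFAULT_VNIC_MBPS = 2_500
MIN_VNIC_MBPS = 100
MAX_VNIC_MBPS = 10_000
# Assumption: the vNICs on one physical port share a single 10 Gb pipe.
PORT_CAPACITY_MBPS = 10_000


def validate_port(vnic_mbps: List[int]) -> List[str]:
    """Return a list of problems with one port's vNIC plan (empty if it looks OK)."""
    problems = []
    if len(vnic_mbps) > VNICS_PER_PORT:
        problems.append(
            f"{len(vnic_mbps)} vNICs requested, but a port exposes {VNICS_PER_PORT}"
        )
    for index, mbps in enumerate(vnic_mbps):
        if not MIN_VNIC_MBPS <= mbps <= MAX_VNIC_MBPS:
            problems.append(
                f"vNIC {index}: {mbps} Mbps is outside "
                f"{MIN_VNIC_MBPS}-{MAX_VNIC_MBPS} Mbps"
            )
    total = sum(vnic_mbps)
    if total > PORT_CAPACITY_MBPS:
        problems.append(
            f"total of {total} Mbps exceeds the {PORT_CAPACITY_MBPS} Mbps port"
        )
    return problems


# The default layout: four vNICs at 2.5 Gbps each on one port.
default_plan = [DEFAULT_VNIC_MBPS] * VNICS_PER_PORT
print(validate_port(default_plan))
```

A plan such as `[6000, 2000, 1000, 1000]` passes the same checks, which is the kind of uneven split (for example, more for storage traffic and less for management) that vNIC mode is meant to allow.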

Mode / IBM Switch Compatibility

- vNIC – works with BNT Virtual Fabric Switch

- pNIC – works with BNT, IBM Pass-Thru, Cisco Nexus

- FCoE – BNT or Cisco Nexus

- iSCSI Acceleration – all IBM 10GbE switches
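The compatibility list above can also be read "backwards": given the switch in your chassis, which adapter modes are open to you? The sketch below encodes the list as a small Python lookup. The names `SWITCH_COMPATIBILITY` and `modes_for` are hypothetical, the switch names are shortened labels rather than official product names, and treating "all IBM 10GbE switches" as covering the three families named in the other rows is my assumption.

```python
# Switch families named in the compatibility list (shortened labels).
ALL_SWITCHES = ("BNT", "IBM Pass-Thru", "Cisco Nexus")

# Mode -> switch families it works with, in the same order as the list above.
SWITCH_COMPATIBILITY = {
    "vNIC": ("BNT",),
    "pNIC": ("BNT", "IBM Pass-Thru", "Cisco Nexus"),
    "FCoE": ("BNT", "Cisco Nexus"),
    # Assumption: "all IBM 10GbE switches" means every family listed here.
    "iSCSI Acceleration": ALL_SWITCHES,
}


def modes_for(switch: str) -> list:
    """Return the adapter modes usable with the given switch family."""
    return [
        mode
        for mode, switches in SWITCH_COMPATIBILITY.items()
        if switch in switches
    ]


print(modes_for("Cisco Nexus"))
print(modes_for("BNT"))
```

Looked at this way, the BNT switch is the only family that unlocks every mode, while a Cisco Nexus shop gives up vNIC mode.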

I think the "one card can do all" concept works really well for the IBM BladeCenter design, and I expect more and more customers to move toward this single-card approach.

Comparison to HP Flex-10

I'll be the first to admit that I'm not a network or storage guy, so I'm not really qualified to compare this offering to HP's Flex-10. However, IBM has created a very clever video that does some comparisons. Take a few minutes to watch and let me know your thoughts.


(UPDATED) Officially Announced: IBM’s Nexus 4000 Switch: 4001I (PART 2)

I’ve gotten a lot of response from my first post, “REVEALED: IBM’s Nexus 4000 Switch: 4001I” and more information is coming out quickly so I decided to post a part 2. IBM officially announced the switch on October 20, 2009, so here’s some additional information:

- The Nexus 4001I Switch for the IBM BladeCenter is part # 46M6071 and has a list price of $12,999 (U.S.) each

- In order for the Nexus 4001I switch for the IBM BladeCenter to connect to an upstream FCoE switch, an additional software purchase is required. This item will be part # 49Y9983, “Software Upgrade License for Cisco Nexus 4001I.” This license upgrade allows the Nexus 4001I to handle FCoE traffic. It has a U.S. list price of $3,899

- The Cisco Nexus 4001I for the IBM BladeCenter will be compatible with the following blade server expansion cards:

  - 2/4 Port Ethernet Expansion Card, part # 44W4479

  - NetXen 10Gb Ethernet Expansion Card, part # 39Y9271

  - Broadcom 2-port 10Gb Ethernet Exp. Card, part # 44W4466

  - Broadcom 4-port 10Gb Ethernet Exp. Card, part # 44W4465

  - Broadcom 10 Gb Gen 2 2-port Ethernet Exp. Card, part # 46M6168

  - Broadcom 10 Gb Gen 2 4-port Ethernet Exp. Card, part # 46M6164

  - QLogic 2-port 10Gb Converged Network Adapter, part # 42C1830

- (UPDATED 10/22/09) The newly announced Emulex Virtual Fabric Adapter WILL NOT work with the Nexus 4001I IN VIRTUAL NIC (vNIC) mode. It will work in pNIC mode according to IBM.

The Cisco Nexus 4001I switch for the IBM BladeCenter is a new approach to getting converged network traffic. As I described a few weeks ago in my post, “How IBM’s BladeCenter works with Cisco Nexus 5000”, before the Nexus 4001I was announced, getting your blade servers to communicate with a Cisco Nexus 5000 meant using a CNA and a 10Gb Pass-Thru Module, as shown on the left. The pass-thru module used in that solution requires a direct connection from the pass-thru module to the Cisco Nexus 5000 for every blade server that requires connectivity. This means that for 14 blade servers, 14 connections are required to the Cisco Nexus 5000. This solution definitely works – it just eats up 14 Nexus 5000 ports. At $4,999 list (U.S.), plus the cost of the GBICs, the pass-thru module may be a good solution for budget-conscious environments.

In comparison, with the IBM Nexus 4001I switch, we can now have as few as 1 uplink to the Cisco Nexus 5000 from the Nexus 4001I switch. This leaves more open ports on the Cisco Nexus 5000 for connections to other IBM BladeCenters with Nexus 4001I switches, or for connectivity from your rack-based servers with CNAs.

Bottom line: the Cisco Nexus 4001I switch will reduce your port requirements on your Cisco Nexus 5000 or Nexus 7000 switch by allowing up to 14 servers to uplink via 1 port on the Nexus 4001I.
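The trade-off between the two designs is simple arithmetic, so here is a rough Python sketch using only the list prices and port counts quoted in this post. The variable and function names are made up for illustration, and the comparison assumes a single I/O module per chassis; it ignores GBICs, cables, redundancy and the cost of the Nexus 5000 ports themselves.

```python
# U.S. list prices quoted in this post.
PASS_THRU_LIST_USD = 4_999
NEXUS_4001I_LIST_USD = 12_999
FCOE_LICENSE_LIST_USD = 3_899

BLADES_PER_CHASSIS = 14


def upstream_ports_pass_thru(blades: int) -> int:
    """Pass-thru: one Nexus 5000 port per blade that needs connectivity."""
    return blades


def upstream_ports_4001i(uplinks: int = 1) -> int:
    """Nexus 4001I: blades share the uplinks, as few as one."""
    return uplinks


ports_saved = (
    upstream_ports_pass_thru(BLADES_PER_CHASSIS) - upstream_ports_4001i()
)
module_premium_usd = (
    NEXUS_4001I_LIST_USD + FCOE_LICENSE_LIST_USD - PASS_THRU_LIST_USD
)

print(f"Nexus 5000 ports freed per chassis: {ports_saved}")
print(f"Extra module list cost with FCoE license: ${module_premium_usd:,}")
```

With these figures, a full chassis frees 13 Nexus 5000 ports in exchange for $11,899 more in module and license list price, so the Nexus 4001I looks better the more you value each upstream port.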

For more details on the IBM Nexus 4001I switch, I encourage you to go to the newly released IBM Redbook for the Nexus 4001I Switch.

IBM Announces Emulex Virtual Fabric Adapter for BladeCenter…So?

Emulex and IBM announced today the availability of a new Emulex expansion card for blade servers that allows up to 8 virtual NICs to be assigned per card. The “Emulex Virtual Fabric Adapter for IBM BladeCenter (IBM part # 49Y4235)” is a CFF-H expansion card based on industry-standard PCIe architecture and can operate as a “Virtual NIC Fabric Adapter” or as a dual-port 10 Gb or 1 Gb Ethernet card.

When operating as a Virtual NIC (vNIC), each of the 2 physical ports appears to the blade server as 4 virtual NICs, for a total of 8 virtual NICs per card. According to IBM, the default bandwidth for each vNIC is 2.5 Gbps. The cool feature about this mode is that the bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port. The one catch with this mode is that it ONLY operates with the BNT Virtual Fabric 10Gb Switch Module, which provides independent control for each vNIC. This means no connection to Cisco Nexus…yet. According to Emulex, firmware updates coming later (Q1 2010??) will allow this adapter to handle FCoE and iSCSI as a feature upgrade. Not sure if that means compatibility with Cisco Nexus 5000 or not. We’ll have to wait and see.

When used as a normal Ethernet Adapter (10Gb or 1Gb), aka “pNIC mode”, the card is viewed as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card. The big difference here is that it will work with any available 10 Gb switch or 10 Gb pass-thru module installed in I/O module bays 7 and 9.

So What?

I’ve known about this adapter since VMworld, but I haven’t blogged about it because I just don’t see a lot of value. HP has had this functionality for over a year now in their Virtual Connect Flex-10 offering, so this technology is nothing new. Yes, it would be nice to set up a NIC in VMware ESX that only uses 200 Mb of a pipe, but what’s the difference between having a fake NIC that “thinks” he’s only able to use 200 Mb and a big fat 10Gb pipe for all of your I/O traffic? I’m just not sure, but am open to any comments or thoughts.
