In this day and age, 99% of datacenter environments are virtualized; however, only a fraction of those environments are using virtualized storage, aka “vSAN”. I thought it would be interesting to take a look at the full 1000+ page list of VMware vSAN Ready Nodes and filter out all non-blade server systems. In this post, I’ll be highlighting only blade server vSAN Ready Nodes.

Tag Archives: Cisco UCS

Cisco Announces New UCS Fabric Interconnect

This month Cisco announced a new addition to the UCS family – a mini Fabric Interconnect called the UCS 6324 Fabric Interconnect, which, unlike the ones before it, plugs directly into the UCS 5108 chassis. With connectivity for up to 15 servers (8 blade servers and up to 7 direct-connect rack servers), the 6324 is geared toward small environments.

The Secret to How Cisco Took the #1 Blade Server Spot

IDC and Cisco confirmed this week that Cisco has taken the #1 x86 blade server spot in North America for Q1 in 2014 with 40% revenue market share according to a recent CRN report. This is quite an accomplishment especially since Cisco has only been reporting their numbers for 3 years. So you might wonder – what is the secret to Cisco’s success? I have a few ideas (right or wrong) that might shed some light on why Cisco is having success in their blade server business.

Are We Finally Getting Market Share Numbers for Cisco UCS?

If you’ve been reading this blog over the past six months, you’ll know that I’ve continued to bust on Cisco for not providing market share numbers after selling their UCS product line for two years. I believe the wait is now over.

This Day in History: "HP Claims Cisco UCS Will Be Dead in 1 Year"

“A year from now the difference will be (Cisco) UCS (Unified Compute System) is dead and we have had phenomenal market share growth in the networking space…And customers are thrilled and partners are making a lot of money.” – Randy Seidl, VP of the Americas, Enterprise Servers Storage and Networking, HP (April 26, 2010)

This quote appeared in a CRN article a year ago today. It came from Randy Seidl, HP’s senior vice president of the Americas, Enterprise Servers Storage and Networking, who was tasked with leading the charge against Cisco. Needless to say, it’s a year later and Cisco UCS is still around, although how much market share it owns remains an open question since Cisco has yet to release market data to IDC or Gartner.

What Cisco Has to Do to Win the Blade Server Market

Over the past several months, there has been a lot of discussion about how 2011 is the year Cisco will become a leader in the blade server space. There’s no doubt that a lot of customers have moved to UCS, but in reality there are a few other things that Cisco will need to do to win the top spot. Today I’m going to discuss a few of these things.

(UPDATED) The Best Blade Server Option Is…[Part 1 – A Look at Cisco]

IBM BladeCenter H vs Cisco UCS

(From the Archives – September 2009)

News Flash: Cisco is now selling servers!

Okay – perhaps this isn’t news anymore, but the reality is Cisco has been getting a lot of press lately, from their overwhelming presence at VMworld 2009 to their ongoing cat fight with HP. Since I work for a Solutions Provider that sells HP, IBM and now Cisco blade servers, I figured it might be good to “try” and put together a comparison between Cisco and IBM. Why IBM? Simply because at this time, they are the only blade vendor who offers a Converged Network Adapter (CNA) that will work with the Cisco Nexus 5000 line. Dell and HP do not currently offer a CNA for their blade server lines, so IBM is the closest we can come to Cisco’s offering. I don’t plan on spending time educating you on blades, because if you are interested in this topic, you’ve probably already done your homework. My goal with this post is to show the pros (+) and cons (-) that each vendor has with their blade offering, based on my personal, neutral observation.

Chassis Variety / Choice: winner in this category is IBM.

IBM currently offers 5 types of blade chassis: BladeCenter S, BladeCenter E, BladeCenter H, BladeCenter T and BladeCenter HT. Each IBM blade chassis has its own niche: the BladeCenter S is designed for small or remote offices with local storage capabilities, whereas the BladeCenter HT is designed for Telco environments with options for NEBS-compliant features including DC power. At this time, Cisco offers only a single blade chassis (the 5108).

IBM BladeCenter H

Cisco UCS 5108

Server Density and Server Offerings: winner in this category is IBM.

IBM’s BladeCenter E and BladeCenter H chassis offer up to 14 blade servers, with servers using Intel, AMD and Power PC processors. In comparison, Cisco’s 5108 chassis offers up to 8 server slots and currently offers servers with Intel Xeon processors. As an honorable mention, Cisco does offer a “full width” blade (Cisco UCS B250 server) that provides up to 384GB of RAM in a single blade server across 48 memory slots, making it possible to reach higher memory capacities at a lower price point.

Management / Scalability: winner in this category is Cisco.

This is where Cisco is changing the blade server game. The traditional blade server infrastructure calls for each blade chassis to have its own dedicated management module to gain access to the chassis’ environmentals and to remote control the blade servers. As you grow your blade chassis environment, you end up managing each chassis separately. Beyond ease of management, the management software that the Cisco 6100 series offers gives users the ability to manage server service profiles that consist of things like MAC Addresses, NIC Firmware, BIOS Firmware, WWN Addresses and HBA Firmware (just to name a few).

Cisco UCS 6100 Series Fabric Interconnect

With Cisco’s UCS 6100 Series Fabric Interconnects, you are able to manage up to 40 blade chassis with a single pair of redundant UCS 6140XP (consisting of 40 ports.)

If you are familiar with the Cisco Nexus 5000 product, then understanding the role of the Cisco UCS 6100 Fabric Interconnect should be easy. The UCS 6100 Series Fabric Interconnect does for the Cisco UCS servers what Nexus does for other servers: it unifies the fabric. HOWEVER, it’s important to note the UCS 6100 Series Fabric Interconnect is NOT a Cisco Nexus 5000. The UCS 6100 Series Fabric Interconnect is only compatible with the UCS servers.

Cisco UCS I/O Connectivity Diagram (UCS 5108 Chassis with 2 x 6120 Fabric Interconnects)

If you have other servers, with CNAs, then you’ll need to use the Cisco Nexus 5000.

The diagram above shows a single connection from the FEX to the UCS 6120XP; however, the FEX has 4 uplinks, so if you want (or need) more throughput, you can have it. This design provides each half-wide Cisco B200 server with 2 CNA ports with redundant pathways. If you are satisfied with using a single FEX connection per chassis, then you can scale up to 20 blade chassis with a Cisco UCS 6120 Fabric Interconnect, or 40 chassis with the Cisco UCS 6140 Fabric Interconnect. As hinted in the previous section, the management software for all the connected UCS chassis resides in the redundant Cisco UCS 6100 Series Fabric Interconnects. This design offers a highly scalable infrastructure that lets you scale simply by dropping in a chassis and connecting the FEX to the 6100 switch. (Kind of like Lego blocks.)
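The scaling numbers above come down to simple port arithmetic, which can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not Cisco tooling: the function name `max_chassis` is hypothetical, and it assumes every port on the fabric interconnect is available for chassis links (in practice some ports would be used for uplinks to the rest of the network).

```python
# Port counts taken from the figures in this post: 20 chassis on a 6120,
# 40 chassis on a 6140, each with a single FEX link.
FI_PORTS = {"UCS 6120": 20, "UCS 6140": 40}


def max_chassis(fi_ports: int, links_per_fex: int) -> int:
    """Chassis one fabric interconnect can attach at `links_per_fex` links each.

    Hypothetical helper: assumes all FI ports are free for chassis links.
    """
    if not 1 <= links_per_fex <= 4:
        raise ValueError("the FEX has 4 uplinks, so links_per_fex must be 1-4")
    return fi_ports // links_per_fex


for model, ports in FI_PORTS.items():
    for links in (1, 2, 4):
        count = max_chassis(ports, links)
        print(f"{model}: {links} link(s) per FEX -> up to {count} chassis")
```

The trade-off is visible right away: every extra FEX link you cable for throughput reduces the number of chassis a single fabric interconnect can serve.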

On the flip side, while this architecture is simple, it’s also limited. There is currently no way to add additional I/O to an individual server. You get 2 x CNA ports per Cisco B200 server or 4 x CNA ports per Cisco B250 server.

As previously mentioned, IBM has a strategy that is VERY similar to the Cisco UCS strategy using the Cisco Nexus 5000 product line with pass-thru modules. IBM’s solution consists of:

- IBM BladeCenter H Chassis
- 10Gb Pass-Thru Module
- CNAs on the blade servers

Even though IBM and Cisco designed the Cisco Nexus 4001i switch that integrates into the IBM BladeCenter H chassis, using a 10Gb pass-thru module “may” be the best option to get true DataCenter Ethernet (or Converged Enhanced Ethernet) from the server to the Nexus switch, especially for users looking for the lowest cost. The performance of the IBM solution should equal the Cisco UCS design, since it’s just passing the signal through; however, the IBM solution is going to require more connectivity. Passing signals through means NO cable consolidation: for every server you’re going to need a connection to the Nexus 5000. For a fully populated IBM BladeCenter H chassis, you’ll need 14 connections to the Cisco Nexus 5000. If you are using the Cisco 5010 (20 ports) you’ll eat up all but 6 ports. Add a 2nd IBM BladeCenter chassis and you’re buying more Cisco Nexus switches. Not quite the scalable design that the Cisco UCS offers.

BladeCenter H Diagram with Nexus 5010 (using 10Gb Passthru Modules)
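To make the pass-thru math concrete, here is a rough Python sketch of how quickly Nexus ports get consumed. The helper names are hypothetical, and the sketch assumes one CNA port per blade lands on each switch (one switch per fabric) and ignores any ports you would need for uplinks.

```python
import math

BLADES_PER_BCH = 14    # fully populated BladeCenter H
NEXUS_5010_PORTS = 20  # port count quoted above for the Nexus 5010


def passthru_ports_needed(chassis: int, blades_per_chassis: int = BLADES_PER_BCH) -> int:
    """Switch ports consumed per fabric: one pass-thru cable per blade."""
    return chassis * blades_per_chassis


def switches_needed(chassis: int, switch_ports: int = NEXUS_5010_PORTS) -> int:
    """Nexus switches required per fabric, assuming every port is usable."""
    return math.ceil(passthru_ports_needed(chassis) / switch_ports)


for n in (1, 2, 3):
    used = passthru_ports_needed(n)
    print(f"{n} chassis: {used} ports, {switches_needed(n)} Nexus 5010(s) per fabric")
```

With one chassis you have 6 ports to spare; the second chassis already pushes you to a second switch per fabric.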

IBM also offers a 10Gb Ethernet Switch Option from BNT (Blade Networks) that will work with converged switches like the Nexus 5000, but at this time that upgrade is not available. Once it does become available, it would reduce the connectivity requirements down to a single cable, but adding a switch between the blade chassis and the Nexus switch could bring additional management complications. Let me know your thoughts on this.

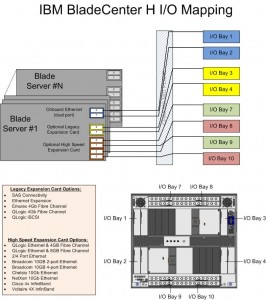

IBM’s BladeCenter H (BCH) does offer something that Cisco doesn’t – additional I/O expansion. Since this solution uses two of the high speed bays in the BCH, bays 1, 2, 3 & 4 remain available. Bays 1 & 2 are mapped to the onboard NICs on each server, and bays 3&4 are mapped to the 1st expansion card on each server. This means that 2 additional NICs and 2 additional HBAs (or NICs) could be added in conjunction with the 2 CNAs on each server. Based on this, IBM potentially offers more I/O scalability.

And the Winner Is…

It depends. I love the concept of the Cisco UCS platform. Servers are seen as processors and memory: building blocks that are centrally managed. Easy to scale, easy to size. However, is it for the average datacenter that only needs 5 servers with high I/O? Probably not. I see the Cisco UCS as a great platform for datacenters with more than 14 servers needing high I/O bandwidth (like a virtualization server or database server). If your datacenter doesn’t need that type of scalability, then perhaps going with IBM’s BladeCenter solution is the choice for you. Going the IBM route gives you the flexibility to choose from multiple processor types and the ability to scale into a unified solution in the future. While ideal for scalability, the IBM solution is currently more complex and potentially more expensive than the Cisco UCS solution.

Let me know what you think. I welcome any comments.

More HP and IBM Blade Rumours

I wanted to post a few more rumours before I head out to HP in Houston for “HP Blades and Infrastructure Software Tech Day 2010” so that it doesn’t appear that I got the info from HP. NOTE: this is purely speculation. I have no definitive information from HP, so this may be false info.

First off – the HP Rumour:

I’ve caught wind of a secret that may be truth, may be fiction, but I hope to find out for sure from the HP blade team in Houston. The rumour is that HP’s development team currently has a Cisco Nexus Blade Switch Module for the HP BladeSystem in their lab, and they are currently testing it out.

Now, this seems far-fetched, especially with the news of Cisco severing partner ties with HP. However, it seems that news tidbit was talking only about products sold with the HP label but made by Cisco (OEM). HP will continue to sell Cisco Catalyst switches for the HP BladeSystem and even Cisco branded Nexus switches with HP part numbers (see this HP site for details). I have some doubt about this rumour of a Cisco Nexus Switch that would go inside the HP BladeSystem, simply because I am 99% sure that HP is announcing a Flex10 type of BladeSystem switch that will allow converged traffic to be split out, with the Ethernet traffic going to the Ethernet fabric and the Fibre traffic going to the Fibre fabric (check out this rumour blog I posted a few days ago for details). Guess only time will tell.

The IBM Rumour:

A few days ago I posted a rumour blog discussing the rumour that HP’s next generation will add Converged Network Adapters (CNAs) to the motherboard on the blades (in lieu of the 1Gb or Flex10 NICs). Well, now I’ve uncovered a rumour that IBM is planning on following later this year with blades that will also have CNAs on the motherboard. This is huge! Let me explain why.

The design of IBM’s BladeCenter E and BladeCenter H has the 1Gb NICs onboard each blade server hard-wired to I/O Bays 1 and 2, meaning only Ethernet modules can be used in these bays (see the image to the left for details). However, I/O Bays 1 and 2 are for “standard form factor I/O modules” while I/O Bays 7-10 are for “high speed form factor I/O modules”. This means that I/O Bays 1 and 2 cannot handle “high speed” traffic, i.e. converged traffic.

This means that IF IBM comes out with a blade server that has a CNA on the motherboard, either:

a) the blade’s CNA will have to route to I/O Bays 7-10

OR

b) IBM’s going to have to come out with a new BladeCenter chassis that allows the high speed converged traffic from the CNAs to connect to a high speed switch module in Bays 1 and 2.

So let’s think about this. If IBM (and HP for that matter) does put CNAs on the motherboard, is there a need for additional mezzanine/daughter cards? Without them, the blade servers could have more real estate for memory or more processors. If there are no extra daughter cards, then there’s no need for additional I/O module bays. This means the blade chassis could be smaller and use less power, something every customer would like to have.

I can really see the blade market moving toward this type of design (not surprisingly, very similar to Cisco’s UCS design), one where only a pair of redundant “modules” is needed to split converged traffic to their respective fabrics. Maybe it’s all a pipe dream, but when it comes true in 18 months, you can say you heard it here first.

Thanks for reading. Let me know your thoughts – leave your comments below.

10 Things That Cisco UCS Policies Can Do (That IBM, Dell or HP Can’t)

ViewYonder.com recently posted a great write-up on some things that Cisco’s UCS can do that IBM, Dell or HP really can’t. You can go to ViewYonder.com to read the full article, but here are 10 things that Cisco’s UCS Policies do:

- Chassis Discovery – allows you to decide how many links you should use from the FEX (2104) to the FI (6100). This affects the path from blades to FI and the oversubscription rate. If you’ve cabled 4, you can just use 2 if you want, or even 1.

- MAC Aging – helps you manage your MAC table. This affects the ability to scale, as bigger MAC tables need more management.

- Autoconfig – when you insert a blade, applies a specific template based on its hardware config and puts it in an organization automatically.

- Inheritance – when you insert a blade, allows you to automatically create a logical version (Service Profile) by copying the UUID, MAC, WWNs, etc.

- vHBA Templates – helps you determine what you want _every_ vmhba2 to look like (i.e. Fabric, VSAN, QoS, Pin to a border port)

- Dynamic vNICs – helps you determine how to distribute the VIFs on a VIC

- Host Firmware – enables you to determine what firmware to apply to the CNA, the HBA, HBA ROM, BIOS, LSI

- Scrub – provides you with the ability to wipe the local disks on association

- Server Pool Qualification – enables you to determine which hardware configurations live in which pool

- vNIC/vHBA Placement – helps you determine how to distribute VIFs over one or two CNAs
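To illustrate how the Chassis Discovery setting in the first item affects the oversubscription rate, here is a minimal Python sketch. It is only an approximation under stated assumptions: a chassis of 8 half-width blades, each presenting one 10Gb port to the FEX, and each FEX-to-FI link also running at 10Gb. The function names are hypothetical and not part of any Cisco tool.

```python
from fractions import Fraction


def oversubscription(blades: int = 8, fex_links: int = 4) -> Fraction:
    """Ratio of blade-facing ports to FEX uplinks in use (all assumed 10Gb)."""
    if fex_links < 1:
        raise ValueError("at least one FEX link is required")
    return Fraction(blades, fex_links)


def ratio_label(blades: int = 8, fex_links: int = 4) -> str:
    """Format the ratio the way it is usually quoted, e.g. '4:1'."""
    ratio = oversubscription(blades, fex_links)
    return f"{ratio.numerator}:{ratio.denominator}"


for links in (1, 2, 4):
    print(f"{links} FEX link(s): {ratio_label(8, links)} oversubscription")
```

Under those assumptions, dropping from 4 links to 1 moves a full chassis from 2:1 to 8:1, which is the trade-off the policy lets you choose.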

For more on this topic, visit Steve’s blog at ViewYonder.com. Nice job, Steve!