IBM is once again striving to increase market share by offering customers the chance to get a “free” IBM BladeCenter chassis. The last free-chassis promotion ran in November; this one took effect July 5, 2011. The chassis is free with no purchase required, but a chassis without any blades or switches is just a metal box. Regardless, this promotion is a great way to offset some of the cost of implementing your blade server project.



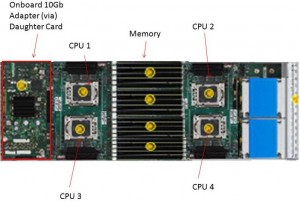

4 Socket Blade Servers Density: Vendor Comparison (2011)

Revised with corrections 3/1/2011 10:29 a.m. (EST)

Almost a year ago, I wrote an article highlighting the 4-socket blade server offerings. At that time the offerings were very slim, but over the past 11 months that post has received more hits than any other, so I figured it was time to revise it. In today’s post, I’ll review the 4-socket Intel and AMD blade servers currently on the market. Yes, I know I’ll have to revise this again in a few weeks, but I’ll cross that bridge when I get to it.

IBM BladeCenter H Power Recommendation Reference Document

IBM’s BladeCenter H is rich with features but requires planning before implementation, especially with regard to power. Over the years, I’ve worked with many customers on the question of what power the BladeCenter H needs, so I created a reference document for my own use, and now I’m sharing it with you. IBM has its own reference document, but mine focuses only on the BladeCenter H and is hopefully a bit simpler to use.

Link to my document: BladeCenter H Power Recommendations – BladesMadeSimple(tm) (Adobe PDF, 440 KB)

Hope this is useful for you. If you would like the actual Visio diagram I used for this, contact me via email with your info.

A Post from the Archive: “Cisco UCS vs IBM BladeCenter H”

It’s always fun to look at the past, so today I wanted to revisit my very first blog post. In “Cisco UCS vs IBM BladeCenter H”, I tried to compare Cisco’s blade technology with IBM’s. Whether I succeeded is up to you to decide. The article ranks #7 in all-time hits, so people are definitely interested. Keep in mind that the post has not been updated to reflect any changes in offerings or technologies; it’s offered purely as a look back in time for your amusement.

Brocade FCoE Switch Module for IBM BladeCenter

4 Socket Blade Servers Density: Vendor Comparison

IMPORTANT NOTE: I updated this blog post on Feb. 28, 2011 with better details. To view the updated post, please go to:

https://bladesmadesimple.com/2011/02/4-socket-blade-servers-density-vendor-comparison-2011/

Original Post (March 10, 2010):

With the Intel Nehalem EX processor a couple of weeks away, I wonder what impact it will have on the blade server market. I’ve been talking about IBM’s HX5 blade server for several months now, so it is very clear that blade server vendors will be developing blades with some iteration of the Xeon 7500 processor. In fact, several people have confirmed to me on Twitter that HP, Dell and even Cisco will offer a 4-socket blade after Intel officially announces the processor on March 30. In today’s post, I want to look at how 4-socket blades will affect the overall capacity of a blade server environment. NOTE: this is pure speculation; I have no definitive information from any of these vendors that is not already public.

The Cisco UCS 5108 chassis holds 8 “half-width” B200 blade servers or 4 “full-width” B250 blade servers, so when guessing what design Cisco will use for a 4-socket Intel Xeon 7500 (Nehalem EX) architecture, I have to bet on the full-width form factor. Why? Simply because there is more real estate. The Cisco B250 M1 blade server is known for its large memory capacity; however, Cisco could sacrifice some of that extra memory space for a 4-socket “Cisco B350” blade. This would pose a bit of an issue for customers wanting to fill a complete rack with these servers, as it would allow only 28 servers in a 42U rack (7 chassis x 4 servers per chassis).


On the other hand, Cisco is in a unique position: its half-width form factor also has extra real estate because, unlike its competitors’ blades, it doesn’t have 2 daughter card slots. Perhaps Cisco would create a half-width blade with 4 CPUs (a B300?). In a 42U rack, a half-width design would allow a maximum of 56 blade servers (7 chassis x 8 servers per chassis).

Dell

The 10U M1000e chassis from Dell can currently handle 16 “half-height” blade servers or 8 “full-height” blade servers. I don’t foresee any way that Dell could put 4 CPUs into a half-height blade; there just isn’t enough room. To do so, Dell would have to sacrifice something, like memory slots or a daughter card expansion slot, which doesn’t seem worth it. Therefore, I predict that Dell’s 4-socket blade will be a full-height blade server, probably named the PowerEdge M910. With this assumption, you could fit 32 blade servers in a 42U rack (4 chassis x 8 blades).

HP


Similar to Dell, HP’s 10U BladeSystem c7000 chassis can currently handle 16 “half-height” blade servers or 8 “full-height” blade servers. I don’t foresee any way that HP could put 4 CPUs into a half-height blade; there just isn’t enough room. To do so, HP would have to sacrifice something, like memory slots or a daughter card expansion slot, which doesn’t seem worth it. Therefore, I predict that HP’s 4-socket blade will be a full-height blade server, probably named the ProLiant BL680 G7 (yes, they’ll skip G6). With this assumption, you could fit 32 blade servers in a 42U rack (4 chassis x 8 blades).

IBM

Finally, IBM’s 9U BladeCenter H chassis holds 14 servers. IBM has one server size, called a “single-wide.” IBM will also be able to combine servers to form a “double-wide,” which is what the newly announced IBM BladeCenter HX5 requires. A double-wide blade server reduces the BladeCenter H’s capacity to 7 servers per chassis. This means you could put 28 IBM HX5 4-socket blade servers into a 42U rack (4 chassis x 7 servers each).

Summary

In a tie for 1st place, at 32 blade servers in a 42U rack, Dell and HP would have the highest blade server density based on their existing full-height blade server designs. IBM and Cisco would tie for 3rd place with 28 blade servers in a 42U rack. However, IF Cisco (or HP and Dell, for that matter) were able to magically redesign their half-width or half-height servers to hold 4 CPUs, they would take 1st place for blade density: 56 servers per rack for Cisco, or 64 for HP and Dell (4 chassis x 16 blades).
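The rack-density arithmetic above boils down to whole chassis per 42U rack times blades per chassis. Here is a minimal Python sketch of that calculation, assuming the chassis heights and slot counts quoted in this post. The 6U height for the Cisco UCS 5108 is inferred from the 7-chassis-per-rack figure rather than stated, and the half-width Cisco entry is the hypothetical 4-socket design speculated about above.

```python
# Chassis height in rack units (U) and 4-socket blade slots per chassis.
# ASSUMPTION: the UCS 5108 is 6U (inferred from 7 chassis per 42U rack);
# the other heights and slot counts are the ones quoted in the post.
CHASSIS = {
    "Cisco UCS 5108, full-width": (6, 4),
    "Cisco UCS 5108, half-width (hypothetical)": (6, 8),
    "Dell M1000e, full-height": (10, 8),
    "HP c7000, full-height": (10, 8),
    "IBM BladeCenter H, double-wide": (9, 7),
}

RACK_U = 42


def rack_density(chassis_u: int, blades_per_chassis: int, rack_u: int = RACK_U) -> tuple[int, int]:
    """Return (whole chassis that fit in the rack, total blades in the rack)."""
    chassis_per_rack = rack_u // chassis_u  # a partial chassis doesn't count
    return chassis_per_rack, chassis_per_rack * blades_per_chassis


if __name__ == "__main__":
    for name, (height, slots) in CHASSIS.items():
        chassis, blades = rack_density(height, slots)
        print(f"{name}: {chassis} chassis x {slots} = {blades} blades")
```

The same function covers a hypothetical half-height 4-socket blade for Dell or HP: `rack_density(10, 16)` returns `(4, 64)`.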

Yes, I know the chances are slim that anyone would fill a rack with 4-socket servers; however, I thought this would be a good comparison to make. What are your thoughts? Let me know in the comments below.

IBM’s New Approach to Ethernet/Fibre Traffic

Okay, I’ll be the first to admit when I’m wrong – or when I provide wrong information.

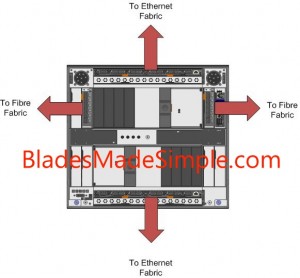

A few days ago, I commented that no one had yet offered the ability to split out Ethernet and Fibre traffic at the chassis level (as opposed to using a top-of-rack switch). I quickly found out I was wrong: IBM can now separate the Ethernet fabric and the Fibre fabric at the BladeCenter H. If you’re interested, grab a cup of coffee and enjoy the read.

First a bit of background. The traditional method of providing Ethernet and Fibre I/O in a blade infrastructure was to integrate 6 Ethernet switches and 2 Fibre switches into the blade chassis, which provides 6 NICs and 2 Fibre HBAs per blade server. This is a costly method and it limits the scalability of a blade server.

A newer method that is becoming more popular is to converge the I/O traffic, using a single converged network adapter (CNA) to carry both the Ethernet and the Fibre traffic over a single 10Gb connection to a top-of-rack (TOR) switch, which then sends the Ethernet traffic to the Ethernet fabric and the Fibre traffic to the Fibre fabric. This reduces the number of physical cables coming out of the blade chassis, offers higher bandwidth and reduces overall switching costs. Until now, IBM has offered two methods to enable converged traffic:

Method 1: install a pair of 10Gb Ethernet Pass-Thru modules in the blade chassis, add a CNA to each blade server, then connect the Pass-Thru modules to a top-of-rack convergence switch from Brocade or Cisco. This is the least expensive method; however, because Pass-Thru modules are used, every blade server needs its own connection on the TOR convergence switch. A 14-blade chassis would therefore consume 14 ports on the convergence switch, potentially leaving the switch with very few available ports.


Method 2: install a pair of IBM Cisco Nexus 4001i switches, add a CNA to each server, then connect the Nexus 4001i switches to a Cisco Nexus 5000 top-of-rack switch. This method lets you use as few as 1 uplink connection from the blade chassis to the Nexus 5000; however, it is more costly and you have to invest in another Cisco switch.

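To make the port trade-off between the two methods concrete, here is a small Python sketch. It assumes a Nexus 5010, with its 20 fixed ports, as the TOR switch, and counts ports on a single TOR switch per chassis. The method labels `"pass-thru"` and `"nexus-4001i"` are hypothetical names chosen for illustration, not IBM or Cisco terminology.

```python
# ASSUMPTION: the TOR switch is a Nexus 5010 with 20 fixed ports.
NEXUS_5010_PORTS = 20


def tor_ports_per_chassis(method: str, blades: int, uplinks: int = 1) -> int:
    """Ports one chassis consumes on a single top-of-rack convergence switch."""
    if method == "pass-thru":
        # A pass-thru module does no switching: each blade's CNA port is
        # cabled straight through, so every blade costs one TOR port.
        return blades
    if method == "nexus-4001i":
        # The embedded switch aggregates blade traffic; only uplinks count.
        if uplinks < 1:
            raise ValueError("at least one uplink is required")
        return uplinks
    raise ValueError(f"unknown method: {method!r}")


if __name__ == "__main__":
    for method, uplinks in (("pass-thru", 1), ("nexus-4001i", 2)):
        used = tor_ports_per_chassis(method, blades=14, uplinks=uplinks)
        print(f"{method}: {used} ports used, {NEXUS_5010_PORTS - used} free")
```

With a full 14-blade chassis, Method 1 leaves 6 of the 20 ports free, while Method 2 with 2 uplinks leaves 18 free; that headroom is what you pay extra for.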

The New Approach

A few weeks ago, IBM announced the “QLogic Virtual Fabric Extension Module,” a device that fits into the IBM BladeCenter H, takes the Fibre traffic from the CNA on a blade server and sends it to the Fibre fabric. This is HUGE! While a top-of-rack convergence switch is helpful, you can now eliminate the need for one, because the I/O traffic is split out into its respective fabrics at the BladeCenter H.


What’s Needed

I’ll make it simple – here’s a list of components that are needed to make this method work:

- 2 x BNT Virtual Fabric 10 Gb Switch Module – part # 46C7191

- 2 x QLogic Virtual Fabric Extension Module – part # 46M6172

- a QLogic 2-port 10 Gb Converged Network Adapter per blade server – part # 42C1830

- an IBM 8 Gb SFP+ SW Optical Transceiver for each uplink to your Fibre fabric – part # 44X1964 (note: the QLogic Virtual Fabric Extension Module doesn’t come with any, so you’ll need the same quantity for each module.)
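The parts list above can be turned into a quick per-chassis bill of materials. This is a hypothetical helper, assuming one redundant pair of switch and extension modules per chassis, up to 14 blades, and the same number of Fibre uplinks on each extension module; the function and parameter names are illustrative only.

```python
# Part numbers as listed in the post.
PART_NAMES = {
    "46C7191": "BNT Virtual Fabric 10 Gb Switch Module",
    "46M6172": "QLogic Virtual Fabric Extension Module",
    "42C1830": "QLogic 2-port 10 Gb Converged Network Adapter",
    "44X1964": "IBM 8 Gb SFP+ SW Optical Transceiver",
}

MAX_BLADES = 14  # single-wide blade slots in a BladeCenter H


def bill_of_materials(blades: int, fc_uplinks_per_module: int) -> dict[str, int]:
    """Hypothetical quantities for the redundant split-fabric design in one chassis."""
    if not 1 <= blades <= MAX_BLADES:
        raise ValueError(f"blades must be between 1 and {MAX_BLADES}")
    if fc_uplinks_per_module < 1:
        raise ValueError("each extension module needs at least one FC uplink")
    modules = 2  # one of each module per side of the redundant design
    return {
        "46C7191": modules,                          # BNT switches, bays 7 and 9
        "46M6172": modules,                          # QLogic modules, bays 3 and 5
        "42C1830": blades,                           # one CNA per blade
        "44X1964": modules * fc_uplinks_per_module,  # an SFP+ per FC uplink per module
    }


if __name__ == "__main__":
    for part, qty in bill_of_materials(blades=14, fc_uplinks_per_module=2).items():
        print(f"{qty:>3} x {part}  {PART_NAMES[part]}")
```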

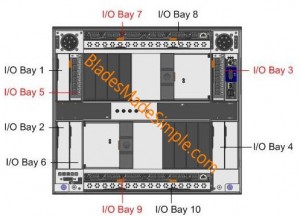

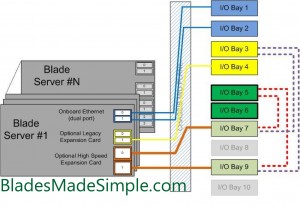

The CNA cards connect to the BNT Virtual Fabric 10 Gb Switch Modules in Bays 7 and 9. These switch modules have an internal connection to the QLogic Virtual Fabric Extension Modules, located in Bays 3 and 5. I/O traffic moves from the CNA cards to the BNT switches, which send the Ethernet traffic out to the Ethernet fabric while the Fibre traffic routes internally to the QLogic Virtual Fabric Extension Modules. From the Extension Modules, the traffic flows into the Fibre fabric.
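The traffic flow just described can be modeled as a simple lookup. This Python sketch assumes CNA port 1 lands on Bay 7 and port 2 on Bay 9, and it also assumes Bay 7 pairs with the extension module in Bay 3 and Bay 9 with Bay 5; check that pairing against IBM’s Redbook before relying on it. `route_frame` is a hypothetical name.

```python
# CNA port -> high-speed I/O bay holding the BNT switch.
CNA_PORT_TO_SWITCH_BAY = {1: 7, 2: 9}

# BNT switch bay -> bay of the QLogic extension module it feeds internally.
# ASSUMPTION: 7 pairs with 3 and 9 pairs with 5; verify against IBM's Redbook.
SWITCH_BAY_TO_EXTENSION_BAY = {7: 3, 9: 5}


def route_frame(cna_port: int, traffic: str) -> list[str]:
    """Return the hops a frame takes from a blade's CNA to its fabric."""
    if cna_port not in CNA_PORT_TO_SWITCH_BAY:
        raise ValueError(f"the CNA has ports 1 and 2, not {cna_port}")
    switch_bay = CNA_PORT_TO_SWITCH_BAY[cna_port]
    hops = [f"CNA port {cna_port}", f"BNT switch (bay {switch_bay})"]
    if traffic == "ethernet":
        # The BNT switch sends Ethernet traffic straight out to the Ethernet fabric.
        hops.append("Ethernet fabric")
    elif traffic == "fibre":
        # Fibre traffic crosses the internal link to the extension module first.
        extension_bay = SWITCH_BAY_TO_EXTENSION_BAY[switch_bay]
        hops += [f"QLogic extension module (bay {extension_bay})", "Fibre fabric"]
    else:
        raise ValueError(f"unknown traffic type: {traffic!r}")
    return hops


if __name__ == "__main__":
    print(" -> ".join(route_frame(1, "fibre")))
```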

It’s also important to understand the switches and how they are connected, because this is a new approach for IBM. Previously, the Bridge Bays (I/O Bays 5 and 6) really hadn’t been used, and IBM had never allowed a card in the CFF-h slot to connect to the switch bay in I/O Bay 3.


There are a few other acceptable designs that still split the fabric out of the chassis; however, they are not “redundant,” so I didn’t consider them relevant. If you want to read the full IBM Redbook on this offering, head over to IBM’s site.

A few things to note with the maximum redundancy design I mentioned above:

1) The CIOv slots on the HS22 and HS22V cannot be used. I/O bay 3 holds an Extension Module, and since the CIOv slot is hard-wired to I/O bays 3 and 4, using it will just cause problems, so don’t do it.

2) The BladeCenter E chassis is not supported for this configuration. It doesn’t have any “high speed bays” and quite frankly wasn’t designed to handle high I/O throughput like the BladeCenter H.

3) Only the parts listed above are supported. Don’t try to slip in a Cisco Fibre Switch Module or use the Emulex Virtual Adapter on the blade server; it won’t work. This is a QLogic design, and they don’t want anyone else’s toys in their backyard.
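The three rules above can be captured as a pre-flight check. This is a minimal, hypothetical validator in Python; it encodes only the constraints stated in this post, and the chassis name strings and function name are illustrative assumptions.

```python
# Part numbers the post lists as the only supported components.
SUPPORTED_PARTS = {"46C7191", "46M6172", "42C1830", "44X1964"}


def check_split_fabric_config(chassis: str, parts: set[str], uses_ciov_slot: bool) -> list[str]:
    """Return the rule violations for a proposed config (an empty list means OK)."""
    problems = []
    if chassis != "BladeCenter H":
        # Rule 2: e.g. the BladeCenter E has no high-speed bays.
        problems.append(f"{chassis} is not supported; a BladeCenter H is required")
    if uses_ciov_slot:
        # Rule 1: CIOv is hard-wired to I/O bays 3 and 4, and bay 3 holds an extension module.
        problems.append("the CIOv slot on the HS22/HS22V must stay empty")
    unsupported = sorted(parts - SUPPORTED_PARTS)
    if unsupported:
        # Rule 3: anything outside the listed part numbers is unsupported.
        problems.append("unsupported parts: " + ", ".join(unsupported))
    return problems
```

A configuration that sticks to the four listed part numbers in a BladeCenter H with the CIOv slots empty returns an empty list; each broken rule adds one message.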

That’s it. Let me know what you think by leaving a comment below. Thanks for stopping by!

How IBM's BladeCenter works with Cisco Nexus 5000

Other than Cisco with its UCS offering, IBM is currently the only blade vendor that offers a Converged Network Adapter (CNA) for the blade server. The 2-port CNA sits in a PCI Express slot on the server and is mapped to the high-speed bays, with CNA port #1 going to High Speed Bay #7 and CNA port #2 going to High Speed Bay #9. Here’s an overview of the IBM BladeCenter H I/O architecture:

Since the CNAs are only switched to I/O Bays 7 and 9, those are the only bays that require a “switch” for the converged traffic to leave the chassis. At this time, the only option to get the converged traffic out of the IBM BladeCenter H is via a 10Gb “pass-thru” module. A pass-thru module is not a switch – it just passes the signal through to the next layer, in this case the Cisco Nexus 5000.

10 Gb Ethernet Pass-thru Module for IBM BladeCenter

The pass-thru module is relatively inexpensive; however, it requires a connection to the Nexus 5000 for every server that has a CNA installed. As a reminder, the IBM BladeCenter H can hold up to 14 servers with CNAs installed, which would require 14 of the 20 ports on a Nexus 5010. However, this is a small price to pay for the 80% efficiency that 10Gb Datacenter Ethernet (or Converged Enhanced Ethernet) offers. The overall architecture for IBM blade servers with CNAs + IBM BladeCenter H + Cisco Nexus 5000 would look like this:

Hopefully, when IBM announces its Cisco Nexus 4000 switch for the IBM BladeCenter H later this month, it will provide connectivity to the CNAs on IBM blade servers and help reduce the number of connections required to the Cisco Nexus 5000 from 14 to perhaps 6 ;)