Last week I mentioned NPAR as a feature of most Broadcom network adapters, like the ones found in the PowerEdge MX740c blade server. I realized that although NPAR is nearly a decade old, it may not be well known by readers, so I thought I’d take a few minutes to break it down for you.

With the increased amount of virtualization in the data center, combined with the growth of data and cloud computing, network efficiency is becoming compromised, driving many organizations to embrace a 10GbE or 25GbE network. While moving to a more robust environment may be ideal for an organization, it also brings challenges, like ensuring that the appropriate bandwidth is available to all resources in both the physical and virtual environments. This is where NPAR comes in. Network Partitioning allows administrators to split each 10GbE port on the card into 4 separate partitions, or physical functions, and allocate bandwidth and resources as needed. (NOTE: 25GbE ports are split into 8 separate partitions.) Each partition is an actual PCI Express function that appears in the blade server’s system ROM, O/S, or virtual O/S as a separate physical NIC.

Each partition can support networking features such as:

- TCP checksum offload

- Large send offload

- Transparent Packet Aggregation (TPA)

- Multiqueue receive-side scaling

- VM queue (VMQ) feature of the Microsoft® Hyper-V™ hypervisor

- Internet SCSI (iSCSI) HBA

- Fibre Channel over Ethernet (FCoE) HBA

Administrators can enable or disable any of these features per partition, and they can configure a partition to run iSCSI, FCoE, and TCP/IP Offload Engine (TOE) simultaneously.

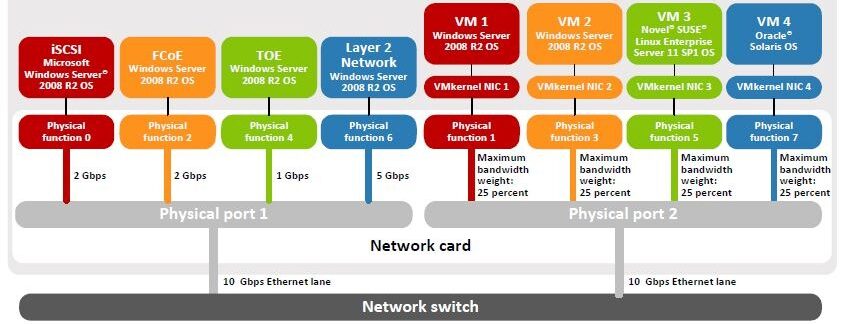

Each partition (4 per port, 8 per NDC) can be set up with a specific size and a specific weight. In the example shown above, Physical Port 1 has 4 partitions:

- Partition 1 (red) = 2Gbps, running as an iSCSI HBA on Microsoft Windows Server 2008 R2

- Partition 2 (orange) = 2Gbps, running as an FCoE HBA on Microsoft Windows Server 2008 R2

- Partition 3 (green) = 1Gbps, running TOE on Microsoft Windows Server 2008 R2

- Partition 4 (blue) = 5Gbps, running as a Layer 2 NIC on Microsoft Windows Server 2008 R2

Each partition’s “Maximum Bandwidth” can be set in increments of 100 Mbps (0.1 Gbps), up to 10,000 Mbps (10 Gbps). Note that this limit applies to the send (transmit) direction only; the receive direction bandwidth is always 10 Gbps.
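To make the Maximum Bandwidth rule concrete, here is a minimal Python sketch that checks a setting against the 100 Mbps step and the 10,000 Mbps ceiling, then totals the Physical Port 1 example. The function and variable names are hypothetical, and treating 0 as an invalid setting is an assumption; this is not a Dell or Broadcom tool.

```python
# Hypothetical helper modeling the "Maximum Bandwidth" rule for a 10GbE partition.
# Assumption: valid values are 100, 200, ..., 10000 Mbps (0 is treated as invalid).

STEP_MBPS = 100          # settings move in 100 Mbps (0.1 Gbps) steps
PORT_MAX_MBPS = 10_000   # 10GbE port; the cap applies to transmit only


def is_valid_max_bandwidth(mbps: int) -> bool:
    """Return True if mbps is a legal Maximum Bandwidth value under the assumed rule."""
    return 0 < mbps <= PORT_MAX_MBPS and mbps % STEP_MBPS == 0


# Physical Port 1 from the example above, in Mbps.
port1 = {"iSCSI HBA": 2000, "FCoE HBA": 2000, "TOE": 1000, "Layer 2 NIC": 5000}

for name, mbps in port1.items():
    print(f"{name}: {mbps / 1000:.1f} Gbps, valid={is_valid_max_bandwidth(mbps)}")

# The four partitions add up to the full 10 Gbps transmit capacity of the port.
total_mbps = sum(port1.values())
print(f"Total transmit allocation: {total_mbps / 1000:.1f} Gbps")
```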

Furthermore, admins can configure the weighting of each partition to give it a larger share of bandwidth when an application requires it. In the example above, Physical Port 2 has the “Relative Bandwidth Weight” on all 4 partitions set to an equal 25%, giving each partition equal weight. If, however, VMkernel NIC 1 (red) needed more weight, or priority, over the other NICs, we could set its weight to 100%, giving that partition top priority.
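One simplified way to think about Relative Bandwidth Weight is sketched below, assuming every partition on the port is transmitting at the same time and the port’s 10 Gbps is divided in proportion to the weights. This is a hypothetical model, not the adapter firmware’s algorithm: it ignores each partition’s Maximum Bandwidth cap and the reuse of idle bandwidth, and the 55/15/15/15 split and the names for NICs 2 to 4 are invented for illustration.

```python
# Simplified, hypothetical model of Relative Bandwidth Weight under full contention:
# each partition gets a share of the port proportional to its weight.
# Not modeled: per-partition Maximum Bandwidth caps and redistribution of idle bandwidth.

PORT_GBPS = 10.0


def weighted_shares(weights: dict[str, int], port_gbps: float = PORT_GBPS) -> dict[str, float]:
    """Split port_gbps among partitions in proportion to their relative weights."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one partition needs a non-zero weight")
    return {name: port_gbps * w / total for name, w in weights.items()}


# Physical Port 2 from the example: four VMkernel NICs at an equal 25% weight.
equal = {"VMkernel NIC 1": 25, "VMkernel NIC 2": 25, "VMkernel NIC 3": 25, "VMkernel NIC 4": 25}
print(weighted_shares(equal))

# Hypothetical alternative that favors VMkernel NIC 1 over the other three.
favored = {"VMkernel NIC 1": 55, "VMkernel NIC 2": 15, "VMkernel NIC 3": 15, "VMkernel NIC 4": 15}
print(weighted_shares(favored))
```

With equal weights each NIC gets 2.5 Gbps when all four are busy; in the favored case NIC 1 gets 5.5 Gbps and the others 1.5 Gbps each.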

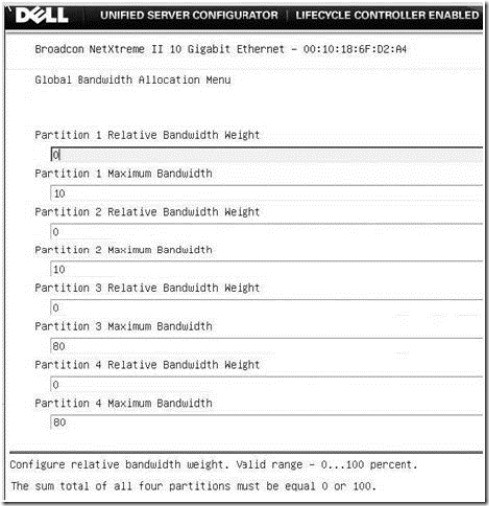

If you are feeling really adventurous, you can oversubscribe a port. This is accomplished by setting the Maximum Bandwidth values of the 4 partitions on that single port so that together they add up to more than 100% of the port. This allows each partition to take as much bandwidth as it is allowed as its individual traffic flow needs change, based on the Relative Bandwidth Weight assigned. Take a look at the following example screenshot from an older Dell blade server:

The example above shows each of the four partitions’ Maximum Bandwidth (shown in 0.1 Gbps increments, so 10 = 1 Gbps):

- Partition 1 = 1 Gbps

- Partition 2 = 1 Gbps

- Partition 3 = 8 Gbps

- Partition 4 = 8 Gbps

Total for all 4 partitions = 18 Gbps, which means the port is 80% (8 Gbps) oversubscribed.
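The oversubscription math above can be checked with a few lines of Python. The displayed values are the ones listed for the four partitions; the variable names are illustrative only.

```python
# Worked version of the oversubscription arithmetic. The setup screen shows
# Maximum Bandwidth in 0.1 Gbps units, so a displayed 10 means 1 Gbps.

PORT_GBPS = 10

# Settings as displayed for partitions 1-4 (units of 0.1 Gbps).
displayed = [10, 10, 80, 80]

gbps = [d / 10 for d in displayed]          # [1.0, 1.0, 8.0, 8.0]
total = sum(gbps)                           # 18.0 Gbps of transmit "promised"
excess = total - PORT_GBPS                  # 8.0 Gbps beyond the physical port
oversub_pct = excess * 100 / PORT_GBPS      # 80.0 percent of the port's capacity

print(f"Total: {total} Gbps, oversubscribed by {excess} Gbps ({oversub_pct:.0f}%)")
```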

Some additional rules to note from the NPAR User’s Manual:

- For Microsoft Windows Server, you can have the Ethernet Protocol enabled on all, some, or none of the four partitions on an individual port.

- For Linux OSs, the Ethernet protocol will always be enabled (even if disabled in Dell Unified Server Configuration screen).

- A maximum of two iSCSI Offload Protocols (HBA) can be enabled across the four available partitions of a single port (on 10GbE cards). For simplicity, it is recommended to always use the first two partitions of a port for any offload protocols.

- For Microsoft Windows Server, the Ethernet protocol does not have to be enabled for the iSCSI offload protocol to be enabled and used on a specific partition.
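As a rough illustration, the rules above could be encoded as a pre-flight check for one 10GbE port. Everything here (the `Partition` class, `check_port`, and the `os_name` strings) is hypothetical and only mirrors the rules as stated in this post, not any Dell configuration format or tool.

```python
# Hypothetical pre-flight check for the NPAR rules listed above (10GbE port, 4 partitions).
from dataclasses import dataclass

MAX_ISCSI_OFFLOAD_PER_PORT = 2           # "a maximum of two iSCSI Offload Protocols"
RECOMMENDED_OFFLOAD_PARTITIONS = {1, 2}  # "use the first two partitions of a port"


@dataclass
class Partition:
    index: int                   # partition number on the port (1-4 on 10GbE)
    ethernet: bool = True        # Ethernet (Layer 2 NIC) protocol enabled
    iscsi_offload: bool = False  # iSCSI offload (HBA) enabled


def check_port(partitions: list[Partition], os_name: str) -> list[str]:
    """Return findings for one port; an empty list means nothing to flag."""
    findings = []
    iscsi = [p.index for p in partitions if p.iscsi_offload]
    if len(iscsi) > MAX_ISCSI_OFFLOAD_PER_PORT:
        findings.append(
            f"error: {len(iscsi)} iSCSI offload partitions, max is {MAX_ISCSI_OFFLOAD_PER_PORT}"
        )
    elif not set(iscsi) <= RECOMMENDED_OFFLOAD_PARTITIONS:
        findings.append(f"note: iSCSI offload on partitions {iscsi}; 1 and 2 are recommended")
    if os_name == "linux":
        # On Linux the Ethernet protocol stays enabled even if disabled in setup.
        for p in partitions:
            if not p.ethernet:
                findings.append(f"note: partition {p.index} will still have Ethernet on Linux")
    return findings


port = [
    Partition(1, ethernet=False, iscsi_offload=True),  # allowed on Windows Server
    Partition(2, iscsi_offload=True),
    Partition(3),
    Partition(4),
]
print(check_port(port, "windows"))
print(check_port(port, "linux"))
```

The same layout passes on Windows Server but is flagged on Linux, where disabling Ethernet on partition 1 has no effect.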

For more information on the Network Partitioning capabilities of the Dell Network Daughter Card, check out the white paper at: Dell Broadcom NPAR White Paper

Kevin Houston is the founder and Editor-in-Chief of BladesMadeSimple.com. He has over 20 years of experience in the x86 server marketplace. Since 1997 Kevin has worked at several resellers in the Atlanta area, and has a vast array of competitive x86 server knowledge and certifications as well as an in-depth understanding of VMware and Citrix virtualization. Kevin has worked at Dell EMC since August 2011 and is a Principal Engineer and Chief Technical Server Architect supporting the Central Enterprise Region at Dell EMC. He is also a CTO Ambassador in the Office of the CTO at Dell Technologies.

Disclaimer: The views presented in this blog are personal views and may or may not reflect any of the contributors’ employer’s positions. Furthermore, the content is not reviewed, approved or published by any employer. No compensation has been provided for any part of this blog.