As we near the end of VMworld, I thought I would take some time to highlight a couple of announcements that could impact the way people use blade servers with VMware vSphere.

End of the vTax – Great News for 4CPU Blade Servers

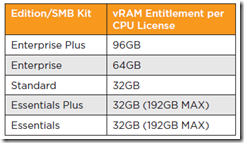

Probably the biggest announcement from VMware was the end of the vRAM entitlement. When VMware released vSphere 5, the licensing program was modified to include a limit on the amount of virtual machine memory, or vRAM, that could be used on a vSphere 5 host based on the vSphere edition purchased (see chart below for specifics). On Monday, August 27, 2012, VMware announced that the vRAM limit would no longer be in effect beginning Sept. 1, 2012. Why is this big news to customers? Let me explain.

[Previous vRAM entitlements per edition]

Since the vRAM entitlement was per CPU license, customers with larger servers were impacted the most. For example, a customer running VMware vSphere 5 Enterprise Plus edition on a blade server with 4 CPUs was capped at 384GB of vRAM (96GB x 4 CPUs). At first that seems reasonable, but once you look deeper, it was a huge limitation. The Intel Xeon E5-4600 based servers have 4 memory channels per CPU and 48 DIMM slots, so in order to reach 384GB of RAM while keeping the memory balanced, you need to:

a) use 48 x 8GB DIMMs – uses 3 DIMMs per channel (DPC) and leaves no room for expansion

b) use 16 x 16GB DIMMs plus 16 x 8GB DIMMs – which uses 2 DPC and leaves room for expansion, but costs more.
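To double-check the arithmetic behind the two options above, here is a minimal Python sketch. The helper name `summarize` and the simplified definition of "balanced" (every DIMM size divides evenly across all 16 channels) are my own assumptions for illustration, not part of any vendor tool.

```python
# Illustrative sketch only: names and the "balanced" rule are assumptions.
CPUS = 4
CHANNELS_PER_CPU = 4    # Xeon E5-4600: 4 memory channels per CPU
SLOTS_PER_CHANNEL = 3   # 48 DIMM slots / (4 CPUs x 4 channels)
CHANNELS = CPUS * CHANNELS_PER_CPU


def summarize(config):
    """Summarize a memory config given as (dimm_count, dimm_size_gb) pairs."""
    for count, _size in config:
        # Simplified balance rule: each DIMM size is spread evenly over all channels.
        if count % CHANNELS != 0:
            raise ValueError("DIMM count does not divide evenly across channels")
    total_dimms = sum(count for count, _size in config)
    dpc = total_dimms // CHANNELS
    if dpc > SLOTS_PER_CHANNEL:
        raise ValueError("more DIMMs than slots")
    return {
        "total_gb": sum(count * size for count, size in config),
        "dpc": dpc,
        "free_slots": CHANNELS * SLOTS_PER_CHANNEL - total_dimms,
    }


option_a = summarize([(48, 8)])            # 48 x 8GB
option_b = summarize([(16, 16), (16, 8)])  # 16 x 16GB plus 16 x 8GB
print(option_a)
print(option_b)
```

Both options land on 384GB; option (a) fills every slot, while option (b) leaves 16 slots free.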

If this customer wanted to bump up to 512GB, they would have had to buy two more vSphere 5 Enterprise Plus licenses, which would have entitled them to 576GB of vRAM (6 CPU licenses x 96GB) and added $6,990 ($3,495 per license, U.S. list) of licensing cost per host, excluding Support and Subscription (SnS).
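The licensing math above can be sketched in a few lines of Python. This is a simplification under stated assumptions: it treats one host in isolation (ignoring that vRAM entitlements were pooled), uses the 96GB Enterprise Plus entitlement and the $3,495 list price quoted above, and the function name `licenses_needed` is hypothetical.

```python
import math

# Figures quoted in the post; everything else here is illustrative.
VRAM_PER_LICENSE_GB = 96   # vSphere 5 Enterprise Plus entitlement per CPU license
LIST_PRICE_USD = 3495      # U.S. list price per license, excluding SnS


def licenses_needed(cpus, vram_gb):
    """One license per CPU at minimum, plus enough to cover the vRAM target."""
    return max(cpus, math.ceil(vram_gb / VRAM_PER_LICENSE_GB))


cpus = 4
total = licenses_needed(cpus, 512)           # licenses required for 512GB of vRAM
extra = total - cpus                         # licenses beyond one per CPU
extra_cost = extra * LIST_PRICE_USD          # added list cost per host
entitlement = total * VRAM_PER_LICENSE_GB    # resulting vRAM entitlement in GB
print(total, extra, extra_cost, entitlement)
```

For the 4 CPU blade this gives 6 licenses in total, 2 of them extra, matching the $6,990 and 576GB figures above.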

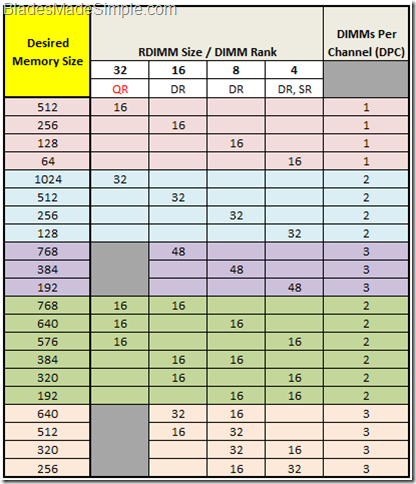

With the removal of the vRAM entitlement from VMware vSphere licensing, customers now have the flexibility to run as many virtual machines as they need, taking advantage of all the physical memory. That means fewer licenses and the freedom to choose any memory configuration (see below) that they may need.

[Balanced Memory Configurations for Blade Servers with Intel E5-4600 CPUs]

Enhanced vMotion – a penalty for blade servers?

The last announcement that I’ll mention is around the release of VMware vSphere 5.1. One of the most talked about new features within vSphere 5.1 is “Enhanced vMotion”, which in summary gives users the ability to migrate virtual machines from host to host without a storage network. This is a great feature for small environments that want vMotion mobility without shared storage, as it allows for the use of internal server storage instead of external shared storage. Architecturally, rack or tower servers are a more suitable platform for customers who value using vMotion with local storage, since they offer anywhere from 16 – 32 internal drives whereas a blade server has 2 – 4 drive bays. Of course, users could take advantage of storage blades (which sit in the blade chassis along with the blade servers), but they are typically iSCSI based, not direct attached, and would therefore be considered a storage network. In reality, environments that would benefit from using Enhanced vMotion are probably not ideal for blade servers anyway, but only time will tell if it impacts blade server sales.

For more information about all of the features of VMware vSphere 5.1, check out Jason Boche’s blog at http://www.boche.net/blog/index.php/2012/08/27/vmworld-2012-announcements-part-i/

Kevin Houston is the founder and Editor-in-Chief of BladesMadeSimple.com. He has over 15 years of experience in the x86 server marketplace. Since 1997 Kevin has worked at several resellers in the Atlanta area, and has a vast array of competitive x86 server knowledge and certifications as well as an in-depth understanding of VMware and Citrix virtualization. Kevin works for Dell as a Server Sales Engineer covering the Global 500 Midwest and East markets.

There are certainly benefits to blade customers for the new vMotion functionality. Picture some hot VMs that might want to sit on SSD but don’t need the availability of shared storage or for which you don’t want the traffic to go over your storage networks – we’ve got hundreds of these in batch processing farms. Now pop SSDs (or FusionIO mezzanine cards) into your blades and configure them as local datastores. In 5.0, you can’t put the blade into maintenance mode without powering off the VMs. In 5.1, we can vMotion these guests to other blades for host patching or other maintenance.

Great comments on the enhanced vmotion announced at #vmworld. It will be interesting to see if customers use blade servers in this way. Thanks for the feedback!


I can’t see much of a case for vSphere 5.1 Enhanced vMotion with blades. I think an in-chassis iSCSI SAN using storage blades makes more sense when using blades in branch offices or for small, isolated deployments. I do think Enhanced vMotion will provide a nice option for a pair of rack mount or tower servers in a branch office.

The big loser with vSphere 5.1 Enhanced vMotion will be Nutanix. This kills a big part of their value proposition. Once VMware adds intelligent caching of flash devices (no doubt adapted from parent EMC’s Project Thunder), Nutanix’s value prop will be available to any VMware host.

Kevin,

The vRAM is dead, which is great news!

Yet I have a problem with your wording of “capped at 384GB”. The vRAM is a limit on memory assigned to VMs, not on physical memory.

If I had that same 4 CPU blade you mention above, but instead I had 1TB of RAM (that makes a lot of DIMMs), I would not violate the vRAM if I ran a single 512GB virtual machine. VMs over 96GB memory are counted as only 96GB vRAM. I admit it’s not VMs that we see often…

When people buy more vSphere licenses than they need, it is profit for VMware, but it’s solely the fault of the consultant/reseller/distributor who didn’t understand vRAM in their client’s environment.

More than once, I have had clients ask for 2 CPU blades with 128GB because they had 2 vSphere Enterprise licenses (64GB of vRAM each). They really can purchase blades with 196GB or 256GB!
