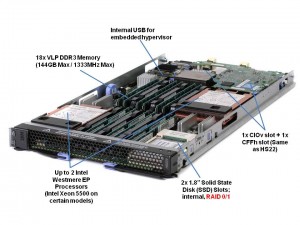

IBM officially announced today a new addition to its blade server line: the HS22v. Modeled after the HS22 blade server, the HS22v is touted by IBM as a “high density, high performance blade optimized for virtualization.” So what makes it so great for virtualization? Let’s take a look.

Memory

One of the big differences between the HS22v and the HS22 is the number of memory slots. The HS22v has 18 very low profile (VLP) DDR3 DIMM slots, for a maximum of 144GB of RAM. This is a key attribute for a server running virtualization, since everyone knows that VMs love memory. Note, though, that the memory will only run at 800MHz when all 18 slots are populated. By comparison, with only 6 DIMMs installed (3 per processor) the memory runs at 1333MHz, and with 12 DIMMs installed (6 per processor) it runs at 1066MHz. As a final note on memory, this server can use both 1.5V and 1.35V DIMMs. The 1.35V memory is newer and will be introduced as the Intel Westmere EP processor becomes available. The big deal here is that lower-voltage memory means lower overall power requirements.
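The DIMM-count-to-speed rule above fits in a small lookup. This is only a sketch and is not IBM's configuration logic. It makes three assumptions: balanced population across 2 processors with 3 DDR3 channels each (so 6, 12 or 18 DIMMs), 8GB DIMMs (18 x 8GB = 144GB), and only the three speeds quoted in this post. The function name `memory_config` is hypothetical.

```python
# Sketch of the HS22v memory speed rule quoted above (Xeon 5500 era).
# Assumption: 2 processors x 3 DDR3 channels = 6 channels, populated evenly.
CHANNELS = 2 * 3
SPEED_MHZ_BY_DIMMS_PER_CHANNEL = {1: 1333, 2: 1066, 3: 800}


def memory_config(dimm_count: int, dimm_gb: int = 8) -> tuple[int, int]:
    """Return (capacity in GB, memory speed in MHz) for a balanced config."""
    if dimm_count % CHANNELS != 0 or not 0 < dimm_count <= 18:
        raise ValueError("sketch assumes balanced population: 6, 12 or 18 DIMMs")
    per_channel = dimm_count // CHANNELS
    return dimm_count * dimm_gb, SPEED_MHZ_BY_DIMMS_PER_CHANNEL[per_channel]


for count in (6, 12, 18):
    capacity, speed = memory_config(count)
    print(f"{count} DIMMs: {capacity}GB @ {speed}MHz")
```

The output makes the trade-off plain: each extra DIMM per channel adds capacity but steps the speed down.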

Drives

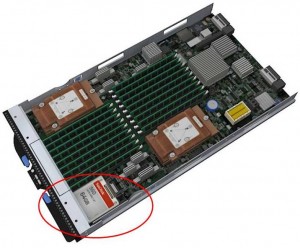

The second big difference is that the HS22v does not use hot-swap drives like the HS22 does. Instead, it uses two solid state drives (SSDs) for local storage, with hardware RAID 0/1 capability standard. Although the picture to the right shows a 64GB SSD, my understanding is that only 50GB drives will be available when they start to become readily available on March 19, with larger sizes (64GB and 128GB) becoming available in the near future. Also note that the image shows a single SSD; the second drive is located directly beneath it.
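To make the RAID 0/1 trade-off concrete for the two-drive SSD setup, here is a small sketch using the 50GB launch size mentioned above. The function name `raid_summary` is hypothetical. The model ignores controller and formatting overhead, so real usable space will be somewhat lower.

```python
def raid_summary(level: int, drive_gb: int, drives: int = 2) -> tuple[int, int]:
    """Return (usable GB, drive failures tolerated) for RAID 0 or RAID 1."""
    if level == 0:
        # Striping: capacities add up, but losing any drive loses the array.
        return drive_gb * drives, 0
    if level == 1:
        # Mirroring: every drive holds a full copy, so usable space is one drive.
        return drive_gb, drives - 1
    raise ValueError("this sketch only models RAID 0 and RAID 1")


print(raid_summary(0, 50))  # (100, 0)
print(raid_summary(1, 50))  # (50, 1)
```

For a hypervisor boot volume, RAID 1 is usually the safer pick, since one SSD can fail without taking the host down.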

So – why did IBM go back to using internal drives? For a few reasons:

Reason #1: To make room for the extra memory slots, the design had to change, and IBM decided that solid state drives were the best fit.

Reason #2: The SSD design allows the server to run at lower power. It’s well known that SSDs draw much less power than spinning disks, so using SSDs helps make the HS22v a more power-efficient blade server than the HS22.

Reason #3: A common trend for virtualization hosts, especially VMware ESXi, is to boot from an integrated USB device. By putting your virtualization software on an integrated USB key, you can eliminate the need for spinning disks or even SSDs, reducing the overall cost of the server.

Processors

So here’s the sticky area. IBM will release the HS22v with the Intel Xeon 5500 processor first. Later in March, once the Intel Westmere EP (Intel Xeon 5600) is announced, IBM will add models that use it, so the HS22v will be offered with both Xeon 5500 and Xeon 5600 processors. Why is this? I think for a couple of reasons:

a) The Xeon 5500 and the Xeon 5600 use the same chipset (motherboard), so it is easy for IBM to make one server board and plop in either the Nehalem EP or the Westmere EP.

b) Simple: IBM wants to get this product into the marketplace sooner rather than later.

Questions

1) Will it fit into the BladeCenter E?

YES, however there may be certain limitations, so I’d recommend checking the IBM BladeCenter Interoperability Guide for details.

2) Is it certified to run VMware ESX 4?

YES

3) Why didn’t IBM call it HS22XM?

According to IBM, the “XM” name is feature focused while “V” is workload focused – a marketing strategy we’ll probably see more of from IBM in the future.

That’s it for now. If you have any questions about the HS22v, let me know in the comments and I’ll try to get some answers.

For more on the IBM HS22v, check out IBM’s web site here.

Check back with me in a few weeks when I’m able to give some more info on what’s coming from IBM!

The HP BL490c blade server, which this IBM server is based on, has been around for 18 months now. Nice to see IBM finally trying to catch up!! Yawn…

True, however the HS22v will work in IBM's older chassis. The HP 490c won't work in the p-Class BladeSystem chassis.

So, P-Class was retired in 2007, and C-Class has been around in 2 options: the C3000 and the C7000. How many revisions of chassis has IBM had? How many were over 2 years late to market? Mmm… The C7000 and C3000 cater for EVERY server in the HP blade portfolio: 2- and 4-socket, Intel, AMD and Itanium, along with storage blades and tape blades. HP doesn't have to add an additional blade to an existing blade to get hot-plug HDDs in the server, and we have 10Gb Ethernet standard on the servers, including Virtual Connect. HP's power and cooling does not negatively affect the entire chassis if there is ever a fan failure, etc., etc. HP doesn't want to put new technology in a chassis that is 4 years old and obsolete (and was around for 5 years). P-Class served as HP's intro to blade technology; technology has moved on since, and so have HP blades…

First off, thanks for the debate. I appreciate you reading and taking the time to offer your thoughts and opinions. I can't argue with your points; they're all good ones. However, customers that still own older chassis don't care about the politics or the market differences between HP and IBM. They simply care that this “new” blade will work in their chassis. While IBM's architecture may be 8 years old, it still works for those customers.

Why would you want to put one of these blades in an old BladeCenter E chassis? You lose the ability to use high-speed interconnects. Without high-speed interconnects, this system won't have enough bandwidth to be a good virtualization platform. So while it might fit in an E enclosure, it really isn't usable for its intended purpose.

In addition, the BL490c can support 192GB using 16GB DIMMs. IBM blades use very low profile memory because they are too thin to support standard DIMMs, so they can't currently use 16GB DIMMs.

Thanks for reading. You bring up a great point that the BladeCenter E doesn't allow for high-speed interconnects, or for more than 2 redundant I/O fabrics. Can't really argue with that one. While the BL490 will handle the 16GB DIMMs, I'm not seeing any customers willing to pony up $2k per 16GB kit. PLUS, with the BL490 you can only use 12 of the 18 DIMM slots if you want to use 16GB DIMMs and reach the 192GB max capacity. SO, why would you buy the BL490 when you could buy the BL460 and achieve the same max memory capacity? Keep in mind, I'm not bashing the BL490 at all (you'll notice no bashing in my blog). I love HP and IBM; I just wanted to inform everyone about the IBM HS22v and its benefits.
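Here is a quick sanity check on the numbers in this exchange. The slot counts and DIMM sizes come from the discussion. Treating the quoted "$2k per 16GB kit" as the price of a single 16GB DIMM is my assumption, and so is the hypothetical helper name.

```python
# Inputs taken from the discussion above; the per-DIMM price is an assumption.
PRICE_PER_16GB_DIMM_USD = 2000  # assumption: one "16GB kit" = one 16GB DIMM


def capacity_gb(dimms: int, dimm_gb: int) -> int:
    """Total installed memory for a given DIMM count and size."""
    return dimms * dimm_gb


print(f"HS22v, 18 x 8GB:   {capacity_gb(18, 8)}GB")   # 144GB
print(f"BL490c, 12 x 16GB: {capacity_gb(12, 16)}GB")  # 192GB
print(f"12 x 16GB at $2k each: ${12 * PRICE_PER_16GB_DIMM_USD:,}")  # $24,000
```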

bladeguy – The 16GB DIMM feels like a product that everyone at HP will talk about and say they have because no one else does, but at the end of the day, few will buy it. It is too expensive, it is a quad-rank DIMM running at a slower 1066MHz, and the 490 will only support 12 of them instead of 18. Why even put them in a 490 when a 460 will work as well?

The 16GB is a product that is all dressed up with nowhere to go. We need faster, cheaper DIMMs and blades with more sockets that can use them instead.

Don't get me wrong, I love the HP products and I sell a bunch of them, but I don't like the messaging behind the 16GB DIMM.

Aaron – I completely agree. I appreciate your feedback, and thanks for reading. It's good to have a good debate between IBM and HP. Thanks, Kevin

Does the IBM HS22v support an onboard 2-port 10Gb/s Ethernet interface, as the HP BL490c G6 does? Can it be divided into 8 channels like HP's Flex-10? Until now everyone was copying IBM; now “Big Blue” is copying the blades from the “printer manufacturer”. :-)

IBM's HS22v does support 10Gb and CNAs, however not on the motherboard, only in the expansion card slots. IBM does not yet have the ability to have onboard 10Gb or CNAs. That feature will require a new chassis, as the current chassis doesn't support high speed connectivity in Bays 1 and 2 (where the blade server motherboard NICs are mapped.) Thanks for reading!

That was my point in the discussion about keeping old enclosures versus replacing them with new ones, not to mention that the card occupies a precious I/O slot. And no comment on Flex-10 functionality? This matters especially in an environment designed for virtualization, where both throughput and the number of physical ports count.

HP's current design using Flex-10 requires an additional pair of Fibre Channel switches for Fibre Channel traffic. This isn't the case with IBM's approach of using CNAs plus the integrated Cisco Nexus 4000i (https://bladesmadesimple.com/2009/10/officially-…). Thanks for the dialog!

While I agree putting newer blades in an older chassis does not realise the full potential of the blade, it gives you options. We have been using IBM blades since they were introduced, and therefore have a spread of chassis.

We work to limited budgets and timeframes, so in the real world we sometimes can't have the latest and greatest. When we do, we often move replaced hardware, such as a chassis, into a lesser role (such as DR). It makes life so much easier to have flexibility in this area.

To give an example of where this has helped us, we currently run our production VMware cluster on 8 blades in an H chassis. We recently ran into a problem at our site, and we were able to move the blades into an older chassis at our DR site and they just worked. Yes, it wasn't perfect and we needed to make some network changes, but we kept the business going, and in the end that's what counts for us.

Thanks for the comment on the HS22v post – I really appreciate the real world example. Many times we focus too much on the marketing aspects of the technologies and often overlook how it affects “real people.” Thanks, again!

It's a dramatization, but I'd be interested to see if there is a response from HP… http://www.youtube.com/watch?v=xOb-QfGVVNo

Flex 10 vs IBM Virtual Fabric – great video! Thanks for sharing and for reading.

Krysztof – The 10Gb LOMs from HP don't bring much to the table. Again, just like with the memory, we aren't comparing valid stats. Sure, you have 2x10Gb LOMs and you can add a card for an additional 2x10Gb connections. Does anybody NEED more than 4x10Gb???? Probably not. IBM has a card that gives you 4x10Gb ports on a single card.

My problem with all the HP slamming here is that no one seems to understand how IBM vs. HP really compares. At the end of the day they are very close to identical. HP doesn't have a very large technology advantage over IBM in the market today.

As for the whole Flex-10 thing: you can do it on IBM with the vNIC card and a BNT-based 10Gb switch. I haven't seen it yet, so I can't comment on it, but IBM does offer something similar.

The whole onboard vs. mezz card argument isn't valid, because at the end of the day you can get to 4x10Gb ports with either solution.
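Both Flex-10 and IBM's vNIC approach come down to carving one physical 10Gb port into several logical NICs that share the port's bandwidth. The sketch below models only that arithmetic. It assumes 4 logical NICs per port, which matches the "8 channels from 2 ports" mentioned earlier in the thread. The function name, NIC names and bandwidth split are hypothetical and not taken from either vendor's tools.

```python
PORT_GBPS = 10.0
MAX_LOGICAL_NICS_PER_PORT = 4  # assumption: 4 partitions per physical port


def partition_port(allocations: dict[str, float]) -> dict[str, float]:
    """Validate a split of one 10Gb port into logical NICs (Gb/s each)."""
    if len(allocations) > MAX_LOGICAL_NICS_PER_PORT:
        raise ValueError("too many logical NICs for one port")
    if any(gbps <= 0 for gbps in allocations.values()):
        raise ValueError("each logical NIC needs a positive allocation")
    if sum(allocations.values()) > PORT_GBPS:
        raise ValueError("allocations exceed the port's 10Gb/s")
    return dict(allocations)


# Hypothetical split for a virtualization host: two ports, 8 logical NICs.
port_a = partition_port({"mgmt": 0.5, "vmotion": 2.5, "vm_a": 5.0, "ft": 2.0})
port_b = partition_port({"storage": 4.0, "vm_b": 5.0, "backup": 0.5, "spare": 0.5})
print(len(port_a) + len(port_b))  # 8
```

Whether the ports sit on the motherboard or on a mezzanine card, the constraint is the same: the logical NICs share one physical link.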

The question once again is: “Is this the last gasp for the venerable IBM BladeCenter?” Just when it seems IBM has squeezed all it can out of this form factor, it does a little bit more. The very low profile DIMMs solve the 18-DIMM-slot capacity problem, which IBM needed to solve before Westmere. I don't know how the price per GB of VLP DIMMs compares to standard DIMMs.

Somebody mentioned HP supporting hot-plug disks, but the 490/495 disks are internal non hot-plug.

Ultimately, the same blade real-estate problems that have been hitting IBM for several years, and that hit HP with the 18-DIMM-slot models, will become a bigger issue for both IBM and HP. After Westmere will come 8-core processors, or perhaps dual-socket Nehalem-EX configurations, with more memory channels.

Kevin – I found something interesting late this week. There is another option besides the SSD for this server: an ESXi key that costs only $75, compared to over $1600 for an SSD (or double that if you want two SSDs). The ESXi key isn't in the normal place in the IBM tool (it is in the Other section, not the Storage section), and I haven't seen any pre-release info about it, as I usually would in the IBM Business Partner information. More information over at my site:

http://blog.aarondelp.com/2010/02/buying-hs22v-…
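The cost gap Aaron describes is easy to put in numbers. The $75 and $1600 figures come from his comment. Because the SSD is quoted as "over $1600", the results below are lower bounds. The function name is hypothetical.

```python
ESXI_KEY_USD = 75
SSD_USD = 1600  # quoted as "over $1600", so the savings are a lower bound


def boot_media_savings_usd(ssd_count: int) -> int:
    """Per-blade savings from the ESXi USB key instead of `ssd_count` SSDs."""
    return ssd_count * SSD_USD - ESXI_KEY_USD


for count in (1, 2):
    print(f"{count} SSD(s): at least ${boot_media_savings_usd(count):,} saved")
```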

Mark – My only comment about the future of IBM is wait and see. There are some really big announcements coming up with the next generation of Intel procs that will be very nice! IBM will be doing some things that will make them very different.

IBM really fell asleep at the wheel with Nehalem but I'm hoping they will be competitive again with the next version.

On the HS22v, the internal USB port allows an integrated ESXi key to be used. I mentioned this under “Reason #3” in the Drives section of my post. I've used the ESXi USB key with an IBM HS22 blade server and it's really slick. I can't think of any reason someone would need local drives (physical or SSD) when using the ESXi USB key. Thanks for the comments; I'm glad this post is creating good conversation.

Mark – thanks for the comments. It will be interesting to see what happens in the next 24 months with all blade vendors, as the CPU and memory architectures are continuing to evolve very quickly. I've seen the Nehalem EX design for IBM's blades and all I can say is that IBM will regain some market share. Thanks for reading!

I have 4 HS22V blade servers in bays 1, 2, 3 and 4 of a chassis, running ESXi. After configuring the VMkernel on host 3, the configuration failed and host 3 can’t connect to the network. I checked the blade and found that INT3 is not in the management VLAN 4095, and INT3 isn't available to add to VLAN 4095. Please help me reconfigure it. Thank you!

Speed vs. capacity dilemma

I have 4 HS22v blades configured with 12x8GB of memory. I am thinking of upgrading the memory with some 16GB DIMMs. My question is: will it be better to use all 18 slots and have more capacity @800MHz, or to replace the 8GB DIMMs with 16GB DIMMs and keep the speed @1066MHz?

Has anyone had an issue with HS22v blades with mixed 8GB and 16GB DIMMs?
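Using only the speed figures from the original post (6 DIMMs at 1333MHz, 12 at 1066MHz, 18 at 800MHz), here is a rough on-paper comparison of the upgrade options. This is a sketch with a hypothetical function name. It does not check whether mixed 8GB/16GB populations or quad-rank 16GB DIMMs are supported, or whether they force a lower speed. Check IBM's documentation for that.

```python
# Speeds quoted in the post for a balanced HS22v population (Xeon 5500).
SPEED_MHZ_BY_DIMM_COUNT = {6: 1333, 12: 1066, 18: 800}


def evaluate(dimm_sizes_gb: list[int]) -> tuple[int, int]:
    """Return (capacity in GB, speed in MHz) for a list of DIMM sizes."""
    count = len(dimm_sizes_gb)
    if count not in SPEED_MHZ_BY_DIMM_COUNT:
        raise ValueError("sketch only covers 6, 12 or 18 DIMMs")
    return sum(dimm_sizes_gb), SPEED_MHZ_BY_DIMM_COUNT[count]


options = {
    "current: 12 x 8GB": [8] * 12,
    "fill 18 slots: 12 x 8GB + 6 x 16GB": [8] * 12 + [16] * 6,
    "replace: 12 x 16GB": [16] * 12,
    "all 18 x 16GB": [16] * 18,
}
for name, dimms in options.items():
    capacity, speed = evaluate(dimms)
    print(f"{name}: {capacity}GB @ {speed}MHz")
```

On paper, filling the empty slots and replacing the 8GB DIMMs both land at 192GB, but the replacement keeps the 1066MHz speed.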